1. Introduction

Genetic epidemiology studies how genetic factors determine health and disease in families and populations, and how these factors interact with the environment. Classical epidemiology usually studies disease patterns and factors associated with disease etiology, with a focus on prevention, whereas molecular epidemiology measures the biological response to environmental factors by evaluating the response in the host (e.g., somatic mutations and gene expression) [1].

Interest in how the environment triggers a biological response started in the mid-nineteenth century, but approximately 100 years passed until epidemiologists and genetic epidemiologists had adequate analytical methods at their disposal to understand how genes and the environment interact [2]. Genetic epidemiology emerged as a stand-alone discipline with Morton in the 1980s, who proposed one of the most widely accepted definitions: “a science which deals with the etiology, distribution, and control of disease in groups of relatives and with inherited causes of disease in a population” [3]. However, genetic epidemiology is clearly a multidisciplinary area that examines the role of both genetic factors and environmental contributors to disease. Equal attention has to be given to the differential impact of environmental agents (familial and non-familial) on different genetic backgrounds [4] to detect how the disease is inherited and to determine the related genetic factors.

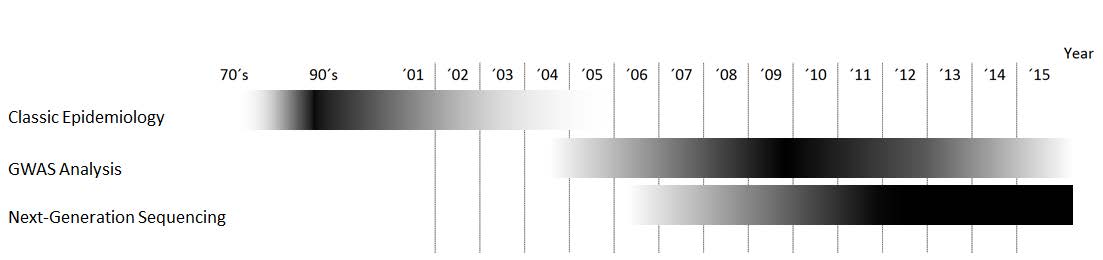

With advances in molecular biology techniques in the last 15 years, our ability to survey the genome, give a functional meaning to the variants found, and compare them among individuals has increased dramatically [5]. Although there is still a long way to go to fully understand rare diseases and how genetic variability influences phenotype, these technological advances allow more in-depth biological knowledge of epidemiology [6] (Figure 1).

Here, we present an overview of approaches in genetic epidemiology studies, ranging from classical family studies/segregation analysis and population studies to the more recent genome-wide association studies (GWAS) and next-generation sequencing (NGS), which have fueled research in this area by allowing more precise data to be obtained in less time.

2. Classical epidemiology

Genetic epidemiology was born in the 1960s as a combination of population genetics, statistics, and classical epidemiology, and applied the methods of biological study available at that time. Generally, the studies included the following steps: establish genetic factor involvement in the disorder, measure the relative size of the contribution of the genetic factors in relation to other sources of variability (e.g., environmental, physical, chemical, or social factors), and identify the responsible genes/genomic areas. For that, family studies (e.g., segregation or linkage analysis) or population (association) studies are usually performed. Approaches include genetic risk studies to determine the relative contribution of the genetic basis and the environment by utilizing monozygotic and dizygotic twins [7]; segregation analyses to determine the inheritance model by studying family trees [8]; linkage studies to determine the coordinates of the implicated gene(s) by studying its cosegregation; and association studies to determine the precise allele associated with the phenotype by using linkage disequilibrium analysis [9].

2.1. Genetic risk studies

Genetic risk studies require a family-based approach in order to evaluate the distribution of traits in families and identify the risk factors that cause a specific phenotype. Traditionally, twin studies have been used to estimate the influence of genetic factors underlying the phenotype by comparing monozygotic twins (sharing all of their genes) and dizygotic twins (sharing, on average, half of their segregating genes). In order to standardize the measurement of similarity, a concordance rate is used. The observation that monozygotic twins are generally more similar than dizygotic twins is usually considered evidence of the importance of genetic factors in the final phenotype, but several studies have questioned this view [10]. Importantly, twin studies make some preliminary assumptions, such as random mating, in which all individuals in the population are potential partners, and that genetic or behavioral restrictions are absent, meaning that all recombinations are possible [11]. Twin studies also assume that the two types of twins share similar environmental experiences relevant to the phenotype being studied [12]. Concordance rates of less than 100% in monozygotic twins indicate the importance of environmental factors [13,14].
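As a minimal sketch of how a concordance rate can be computed, the following Python snippet uses the pairwise definition (concordant affected pairs divided by all pairs with at least one affected twin). The function name `pairwise_concordance` and the twin-pair counts are illustrative assumptions and do not come from any study cited here.

```python
from typing import List, Tuple


def pairwise_concordance(pairs: List[Tuple[bool, bool]]) -> float:
    """Pairwise concordance: concordant affected pairs divided by
    all pairs in which at least one twin is affected."""
    concordant = sum(1 for a, b in pairs if a and b)
    discordant = sum(1 for a, b in pairs if a != b)
    ascertained = concordant + discordant
    if ascertained == 0:
        raise ValueError("no pair contains an affected twin")
    return concordant / ascertained


# Hypothetical data: each tuple is (twin 1 affected, twin 2 affected).
mz_pairs = [(True, True)] * 30 + [(True, False)] * 20 + [(False, False)] * 50
dz_pairs = [(True, True)] * 10 + [(True, False)] * 40 + [(False, False)] * 50

mz_rate = pairwise_concordance(mz_pairs)  # 30 concordant out of 50 ascertained
dz_rate = pairwise_concordance(dz_pairs)  # 10 concordant out of 50 ascertained

print(f"MZ concordance: {mz_rate:.2f}, DZ concordance: {dz_rate:.2f}")
```

In this made-up example the monozygotic rate exceeds the dizygotic rate, which would be read as suggesting a genetic contribution, while a monozygotic rate below 1 would point to environmental factors, subject to the assumptions described above.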

2.2. Segregation analysis

The objective of segregation analysis is to determine the mode of inheritance of a given disease or phenotype. This approach can distinguish between Mendelian (i.e., autosomal or sex-linked, recessive or dominant) and non-Mendelian (no clear pattern [15]) inheritance patterns. For the non-Mendelian patterns, factors interfering with genotype-phenotype correlation, such as incomplete penetrance, variable expressivity, locus heterogeneity, and the variable effect of environmental factors, can complicate the segregation analysis [16]. Thus, families with large pedigrees and many affected individuals can be particularly informative for these studies [17].

2.3. Linkage studies

Linkage studies aim to obtain the chromosomal location of the gene or genes involved in the phenotype of interest. Genetic linkage was first used by William Bateson, Edith Rebecca Saunders, and Reginald Punnett, and later expanded by Thomas Hunt Morgan [18]. One of the main concepts in linkage studies is the recombination fraction, which is the fraction of births in which recombination occurred between the studied genetic marker and the putative gene associated with the disease. If the loci are far apart, segregation will be independent; the closer the loci, the higher the probability of cosegregation [19,20]. Classically, the percentage of recombinants has been used to measure genetic distance: one centimorgan (cM), named after the geneticist Thomas Hunt Morgan, is equal to a 1% chance of recombination between two loci. With this information, linkage maps can be constructed. A linkage map is a genetic map of a species in which the relative positions of its genes or genetic markers are shown based on the frequencies of recombination between markers during the crossover of homologous chromosomes [21]. The more frequent the recombination, the farther apart the two loci are. Linkage maps are not physical maps, but relative maps. Translating the measure into a physical unit of distance, 1 cM corresponds to approximately 1 million bases [22].
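The relationship between recombinant counts, the recombination fraction, and genetic distance can be sketched as follows. The function names and the offspring counts are hypothetical, and the direct proportionality between recombination fraction and centimorgans is an approximation that is reasonable only for closely spaced loci; over longer distances, mapping functions that account for multiple crossovers are normally used instead.

```python
def recombination_fraction(recombinants: int, total: int) -> float:
    """Fraction of scored offspring in which marker and trait locus recombined."""
    if total <= 0 or not 0 <= recombinants <= total:
        raise ValueError("counts must satisfy 0 <= recombinants <= total, total > 0")
    return recombinants / total


def approx_map_distance_cm(theta: float) -> float:
    """Approximate genetic distance in cM, using 1% recombination = 1 cM.
    Assumption: only valid for small recombination fractions."""
    return 100.0 * theta


# Hypothetical cross: 8 recombinant offspring out of 200 scored.
theta = recombination_fraction(8, 200)
distance_cm = approx_map_distance_cm(theta)

# Rough physical distance using the approximate 1 cM ~ 1 million bases conversion.
approx_bases = distance_cm * 1_000_000

print(f"theta = {theta:.3f}, distance ~ {distance_cm:.1f} cM, ~ {approx_bases:.0f} bases")
```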

Linkage analysis is based on a likelihood ratio, expressed as the logarithm of odds (LOD) score, which is a statistical estimate of whether two genes are likely to be located near each other on a chromosome and are, therefore, likely to be inherited together. This analysis can be either parametric (if the relationship between genotype and phenotype is assumed to be known) or non-parametric (if the relationship between phenotype and genotype is not established) [23].
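To make the LOD score concrete, the sketch below evaluates the standard two-point form, LOD(θ) = log10[L(θ) / L(0.5)], for the simplest case of phase-known, fully informative meioses, where L(θ) = θ^r (1 − θ)^(n − r) for r recombinants in n meioses. The function name, the counts, and the grid search over θ are illustrative assumptions; real parametric linkage software handles pedigrees, unknown phase, and penetrance models that this sketch ignores.

```python
import math


def lod_score(recombinants: int, meioses: int, theta: float) -> float:
    """Two-point LOD score for phase-known meioses:
    log10 of the likelihood at theta over the likelihood at theta = 0.5."""
    if not 0.0 < theta <= 0.5:
        raise ValueError("theta must be in (0, 0.5]")
    if not 0 <= recombinants <= meioses:
        raise ValueError("recombinants must be between 0 and meioses")
    non_recombinants = meioses - recombinants
    log_l_theta = (recombinants * math.log10(theta)
                   + non_recombinants * math.log10(1.0 - theta))
    log_l_null = meioses * math.log10(0.5)  # no linkage: theta = 0.5
    return log_l_theta - log_l_null


# Hypothetical data: 2 recombinants observed in 20 informative meioses.
thetas = [i / 100 for i in range(1, 51)]  # grid from 0.01 to 0.50
best_theta = max(thetas, key=lambda t: lod_score(2, 20, t))
best_lod = lod_score(2, 20, best_theta)

print(f"maximum LOD = {best_lod:.2f} at theta = {best_theta:.2f}")
```

By convention, a maximum LOD score of 3 or more (odds of 1000:1 in favor of linkage) is commonly taken as evidence of linkage.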

2.4. Association studies

Association studies, which are frequently confused with linkage studies, focus on populations. This approach tests whether a locus differs between two groups of individuals with phenotypic differences. The loci are usually susceptibility markers that increase the probability of having the phenotype or disease, but for which there is not necessarily linkage, as the marker can be neither necessary nor sufficient for phenotype/disease expression [24]. Due to the increased number of individuals in such a study, the statistical power of this approach is greater than that of linkage analysis, and it is more likely to detect genes with a low effect on the phenotype [25].
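One simple way to test whether a locus differs between two groups is an allelic chi-square test on a 2x2 table of allele counts in cases and controls, together with an odds ratio as an effect-size estimate. The following is a minimal sketch under that assumption; the function name and all counts are hypothetical, and it ignores issues such as population stratification and covariates that real association analyses must address.

```python
import math
from typing import Tuple


def allelic_association(case_alt: int, case_ref: int,
                        ctrl_alt: int, ctrl_ref: int) -> Tuple[float, float, float]:
    """Return (odds_ratio, chi2, p_value) for a 2x2 allele-count table.
    Uses the Pearson chi-square statistic with 1 degree of freedom."""
    observed = [[case_alt, case_ref], [ctrl_alt, ctrl_ref]]
    if min(case_alt, case_ref, ctrl_alt, ctrl_ref) <= 0:
        raise ValueError("all four cell counts must be positive")
    row_totals = [sum(row) for row in observed]
    col_totals = [case_alt + ctrl_alt, case_ref + ctrl_ref]
    n = sum(row_totals)

    chi2 = 0.0
    for i in range(2):
        for j in range(2):
            expected = row_totals[i] * col_totals[j] / n
            chi2 += (observed[i][j] - expected) ** 2 / expected

    # Survival function of a chi-square distribution with 1 df.
    p_value = math.erfc(math.sqrt(chi2 / 2.0))
    odds_ratio = (case_alt * ctrl_ref) / (case_ref * ctrl_alt)
    return odds_ratio, chi2, p_value


# Hypothetical counts: 1000 cases and 1000 controls, i.e. 2000 alleles per group.
odds_ratio, chi2, p_value = allelic_association(600, 1400, 500, 1500)
print(f"OR = {odds_ratio:.2f}, chi2 = {chi2:.2f}, p = {p_value:.2e}")
```

An odds ratio above 1 with a small p-value would suggest that the allele is more frequent in cases, which is the sense in which such a marker increases susceptibility without being necessary or sufficient for the disease.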

3. Molecular epidemiology

Although the current approaches to epidemiology studies rely on those discussed above, advances in biotechnology have brought significant changes to genetic epidemiology and how these studies are performed. Technological improvements have accelerated data gathering and interpretation [5], broadening our understanding of disease etiology.

3.1. GWAS analysis

In the last 10 years, GWAS have transformed the world of genetic epidemiology, with a large number of research studies and publications on complex diseases, allowing the identification of a great number of phenotype-associated genomic loci [26]. Typically, linkage studies in combination with information from family pedigrees are used to broadly estimate the position of the disease-associated loci [27]. With the advent and popularization of array technology, GWAS have become a widespread tool for genetic epidemiology studies. This approach allows the simultaneous and highly accurate interrogation of millions of genomic markers at a reasonable cost and speed. The first GWAS was published by Klein et al. [28], and to date more than 2000 articles have been published based on this methodology [29]. These studies allow the determination of thousands of disease-associated genomic loci, which could serve as risk predictors if a large enough discovery sample size is provided [30]. In addition, these dense, genome-wide markers allow a reasonable approximation of narrow-sense heritability [31].

In order to find a genetic association with a given phenotype, GWAS need the effect of the variant(s) to be substantial and/or the variant(s) to be in strong linkage disequilibrium with previously genotyped markers [32]. GWAS are mostly useful under the common-disease common-variant hypothesis [33]. Therefore, this approach may not be adequate for some common diseases for which rare variants with additive effects are the underlying mechanism [34].

GWAS have been useful for obtaining genomic information about the basis of several diseases, but they have some limitations. First, as only genetic markers are surveyed in this approach, it is difficult to interpret the results, partially due to our current lack of understanding of genomic function. The use of non-random associations of variants at different loci (i.e., linkage disequilibrium) as a correlation tool also impacts the interpretation of results [35]. GWAS identify blocks of variants, not necessarily the real functional variants [36]. Second, and related to the first point, we miss part of the heritability because of a gap between the variance explained by the significant single nucleotide polymorphisms (SNPs) identified and the estimated heritability [37]. This could be explained, at least partially, by the limited information obtained from the genome by GWAS. Small insertions and deletions, large structural variants, epigenetic factors, gene interactions, and gene-by-environment interactions could be playing a role in that gap [38,39,40].
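Linkage disequilibrium between two biallelic loci is commonly quantified with the coefficient D = p_AB − p_A p_B and the squared correlation r² = D² / [p_A(1 − p_A) p_B(1 − p_B)]. The sketch below computes both from haplotype and allele frequencies; the function name and the frequencies are hypothetical and serve only to show why a genotyped marker in strong LD with an untyped variant can stand in for it, without identifying which of the two is functional.

```python
from typing import Tuple


def linkage_disequilibrium(p_ab: float, p_a: float, p_b: float) -> Tuple[float, float]:
    """Return (D, r_squared) for two biallelic loci.
    p_ab: frequency of the haplotype carrying allele A and allele B.
    p_a, p_b: frequencies of allele A and allele B."""
    denominator = p_a * (1.0 - p_a) * p_b * (1.0 - p_b)
    if denominator <= 0.0:
        raise ValueError("both loci must be polymorphic (0 < frequency < 1)")
    d = p_ab - p_a * p_b
    return d, (d * d) / denominator


# Hypothetical frequencies for three marker pairs.
d_full, r2_full = linkage_disequilibrium(0.5, 0.5, 0.5)      # alleles always together
d_none, r2_none = linkage_disequilibrium(0.25, 0.5, 0.5)     # independent loci
d_partial, r2_partial = linkage_disequilibrium(0.4, 0.5, 0.5)

print(f"r2 values: {r2_full:.2f}, {r2_none:.2f}, {r2_partial:.2f}")
```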

3.2. Next-generation sequencing

In the last 8 years, the advent of NGS has helped fill the gap in understanding the genome. Because sequencing interrogates each individual base, it may help in screening for rare variants.

NGS promises great opportunities for finding the answers to questions raised by array technology, as it has the potential to provide additional biological insight into disease etiology. As we move into an era of personalized medicine and complex genomic databases, the demand for new and existing sequencing technologies is constant. Although it is not yet possible to routinely sequence an individual genome for $1000, novel approaches are reducing the cost per base and increasing throughput on a daily basis [41,42]. Moreover, advances in sequencing methodologies are changing the ways in which scientists analyze and understand genomes, whereas the results that they yield are being disseminated widely through science news magazines [43].

Advances in knowledge on the genetic basis of pathologies have changed the way in which such entities are understood. Thus, diseases have gone from being individual-specific to a familial phenomenon in which genetic alterations (mutations) can be genealogically traced to the molecular level.

NGS can be used to identify several types of alterations in the genome, the most common of which are SNPs, structural variants, and epigenetic variations on very large regions of the genome [44,45,46,47,48]. Because of the capacity of NGS to detect many types of genomic and epigenetic variations on a genome scale in a hypothesis-free manner with great coverage and accuracy, it is starting to explain the missing heritability gap left by GWAS [37,49]. With these tools, it is currently possible to obtain a more comprehensive view of how phenotypic variance works in genetic epidemiology.

NGS allows researchers to study all of the SNPs in each individual directly [50]. This is a large amount of information, which requires large data analysis resources. In whole genome and whole exome analysis, the number of rare variants that is revealed can be overwhelmingly large. Most of these variants have no known functional relevance. Therefore, it is not yet easy or straightforward to filter and identify the causal variants, even after accurate variant calling has been performed. Targeted resequencing of candidate genes could be a feasible option for avoiding the high number of variants obtained by whole genome and whole exome sequencing in cases in which there is already a strong knowledge basis regarding phenotype etiology, but the number of genes is still too large for traditional Sanger sequencing [51,52,53]. This type of study significantly reduces analysis costs, as samples could be multiplexed for the analysis, and simultaneously reduces the number of variants found in the regions of interest. Therefore, the analysis will be comparatively easier and the amount of information given per individual smaller, though more focused on the targeted genes. Another option would be to sequence family trios in order to allow filtering of shared variants and speed up the identification of de novo mutations in the affected individual [54,55,56,57].
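The trio-filtering idea can be sketched with simple set operations: variants present in the affected child but absent from both parents are kept as candidate de novo mutations. The variant representation, the function name, and the example variants below are all hypothetical, and the sketch assumes that variant calls are already available as sets; in practice, coverage, genotype quality, and calling errors in the parents would also need to be considered before a variant is labeled de novo.

```python
from typing import Set, Tuple

# A variant is represented here as (chromosome, position, reference, alternate).
Variant = Tuple[str, int, str, str]


def candidate_de_novo(child: Set[Variant],
                      mother: Set[Variant],
                      father: Set[Variant]) -> Set[Variant]:
    """Variants called in the child but in neither parent."""
    return child - mother - father


# Hypothetical variant calls for one trio.
mother_calls: Set[Variant] = {("chr1", 1000, "A", "G"), ("chr2", 5000, "C", "T")}
father_calls: Set[Variant] = {("chr1", 1000, "A", "G"), ("chr3", 7500, "G", "A")}
child_calls: Set[Variant] = {
    ("chr1", 1000, "A", "G"),   # shared with both parents
    ("chr3", 7500, "G", "A"),   # inherited from the father
    ("chr7", 4200, "T", "C"),   # absent from both parents
}

de_novo = candidate_de_novo(child_calls, mother_calls, father_calls)
print(sorted(de_novo))
```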

Thus, NGS can be applied to the study of both rare and common diseases. For rare monogenic diseases, genes can be directly sequenced and variants identified with a small sample size [58,59,60]. Depending on the genetic heterogeneity, finding the involved allele could still be challenging. Rare diseases are usually identified by symptoms, which could be shared by completely different diseases, as the mechanisms underlying the phenotype could be different. This is one of the most difficult points when analyzing rare diseases with genetic heterogeneity. For these cases, larger sample sizes are usually required in order to find the genomic loci implicated in the phenotype etiology [6,55,61,62,63,64]. Time of appearance and disease severity are often governed by the residual enzymatic activity of mutated proteins and the influence of the individual genomic background. Therefore, the type of causal variants could be diverse (e.g., coding, splicing, non-coding, missense, epigenetic alterations), as could their influence on final protein activity. To make it even more complex, those alterations could be shared between individuals with different phenotypes, depending on the penetrance of the variant, background, or environment [65].

3.3. Functional annotation

As advances in technology imply generating a larger amount of data, genomic annotation is crucial for variant prioritization and the interpretation of results. With the use of adequate tools, random and systematic noise, false positives, and false negatives can be reduced, easing the final analysis. Study design can also influence the analysis, as it is a compromise between the amount of data to be generated and the scope of the study; whole genome sequencing is expected to provide hundreds of thousands of variants, most with as yet unknown significance, in intronic or non-coding regions. Whole exome sequencing will still result in a large number of variants, but the annotation of exonic regions is much more curated than that of intronic regions. In the case of a gene-panel targeted study, the list of variants could be reduced to several hundred, depending on the number of genes included, making the analysis and filtering easier, but the data will be limited to the previously selected genes.

The Human Reference Genome established in 2001 [66,67] and the achievements of large sequencing projects such as the 1000 Genomes Project [68] are catalyzing advances in human genetics. The large samples obtained with these projects provide adequate statistical power to shed light on rare variant effects [6,64,69] and empower the use of analysis tools for automatic variant annotation.

Methods for variant analysis and effect prediction have been developed in order to speed up this process. A complete list of software and tools is available online [70]. These methods focus mostly on coding regions in the human genome. Although 98% of the human genome is non-coding [71], these regions are less well known [72]. Thus, there are annotation tools extending the scope to the non-coding and regulatory areas, such as HaploReg [73], RegulomeDB [74], CADD [75], VariantDB [76], GWAVA [77], and ANNOVAR [78], among others [79]. However, the final judgment regarding potential variants is in the hands of the user.

Large consortia, such as the ENCODE project, have generated a large amount of information on the human genome [80], including information on transcription factor binding sites, histone modifications, and DNA methylation, in order to explain their influence on the overall phenotype.

4. Conclusion

Technological advances are playing a crucial role in the evolution of genetic epidemiology as a discipline, as they allow us to address more complex biological questions. The spread and popularization of NGS, driven by the reduction in the cost per sequenced base, is democratizing access to these technologies, allowing researchers to continue on the path opened by previous tools, such as GWAS. This is reflected in the increasing number of research groups and publications using these technologies.

Currently, NGS has the potential to move genetic epidemiology forward, as it allows the assessment of common and rare SNPs, as well as other diverse types of genomic and epigenetic variations, using a hypothesis-free whole genome analysis. The elucidation of genome variability is crucial for increasing our understanding of living systems.

Nonetheless, advances would not be possible without the appropriate mathematical algorithms to transform the sequences into meaningful information or without databases to annotate the identified variants. To fill this gap in information, large programs have been established (1000 Genomes Project consortium [81] and the NHGRI Genome Sequencing Program (GSP) [82]) to provide annotation data on the variations in the human genome.

Overall, new technologies such as GWAS and NGS constitute an opportunity for researchers to understand the genetic variability underlying complex phenotypes and provide unprecedented tools for their investigation.

Conflict of Interest

The authors declare that they have no competing interest.
