1.

Introduction

The study of difference equations has long been a cornerstone in the understanding of discrete dynamical systems, with applications in fields as diverse as economics, biology, engineering, and time series analysis. Since the 18th century, significant progress has been made in deriving closed-form solutions for certain classes of difference equations and systems (see [1,2]). These early contributions laid the foundation for the study of solvability of difference equations, a topic that remains active in modern research. Many classical texts devote chapters to the solvability of such systems (see [3,4,5]), and recent work continues to extend the boundaries of the field (see [6,7]). Despite this progress, solvable difference equations remain a narrow subset of the broader spectrum of systems, which has prompted the use of alternative methods to analyze those that lack closed-form solutions (see [4,5,6,7,8,9,10,11,12,13]).

The quest for solvability often revolves around deriving usable closed-form formulas, although these can become exceedingly complex. In such cases, qualitative analysis is a more practical approach, providing insight into the system's behavior when explicit solutions are cumbersome or impossible to obtain (see [14]). Nevertheless, when they can be found, general solution formulas for new classes of difference equations are invaluable. Many systems, including nonlinear ones, can be transformed into known solvable systems and thereby inherit their solvability. Notable examples include two-dimensional systems studied by Stević, which suitable transformations render solvable. For instance, in the work by Stević [15], the system of difference equations

was transformed into a more manageable form, leading to its solvability. Similarly, in another study [16], the system

was also successfully transformed into a solvable form. Additionally, in a further study [17], a similar approach was used for the system

demonstrating how transformations can simplify a system's structure and give deeper insight into its dynamics. These examples underscore the continued relevance of transformation techniques for analyzing complex behavior in difference systems: such transformations not only render the systems solvable but also clarify the patterns that govern their behavior.

As complex systems continue to emerge in various scientific domains, the need for models capable of capturing both the linear and nonlinear behaviors of these systems has grown significantly. Among the various classes of difference equations, three-dimensional systems are a particularly rich area of investigation because they can model real-world phenomena in which multiple interacting variables influence the system's evolution. Recent studies of three-dimensional difference equations have highlighted their role in understanding complex dynamical behaviors, especially in systems with nonlinear feedback and interacting variables. For instance, Elsayed et al. [18] investigated solutions of nonlinear rational systems, revealing intricate behaviors governed by recursive relations. Similarly, Khaliq et al. [19] analyzed exponential difference equations, uncovering stability properties and persistent dynamics in three-dimensional systems. Khaliq et al. [20] also studied the boundedness, persistence, local and global behavior, and rate of convergence of positive solutions of a three-dimensional second-order system of exponential difference equations, supporting their theoretical results with examples. These studies underscore the growing importance of analytical and computational tools for such systems, particularly when closed-form solutions are not readily available. Our paper builds on these contributions by introducing a bilinear transformation approach that offers a new way to study and simplify three-dimensional systems. In this paper, we focus on a specific three-dimensional system of difference equations,

where $\alpha,\beta,\gamma,\delta,\varepsilon,\lambda,\tau,\sigma\in\mathbb{R}$, $\alpha^2+\beta^2\neq0$, $\gamma^2+\delta^2\neq0$, $\varepsilon^2+\lambda^2\neq0$, $\tau^2+\sigma^2\neq0$, and the initial values satisfy $p_0,q_0,p_{-1},q_{-1},r_0\in\mathbb{R}$. We combine mathematical transformations with computational tools to study its dynamics. A central difficulty in studying such systems is the complexity of their solutions, especially when nonlinearities and feedback mechanisms are involved. To address this, we introduce a bilinear transformation that reduces the original system to an equivalent, more tractable two-dimensional system and clarifies its structure. This approach allows us to focus on essential properties such as stability, oscillation, and growth, and on how these properties change with the parameters.

Our investigation shows that this system exhibits rich dynamical behaviors, including oscillatory and stable states, which depend strongly on the discriminant of the quadratic polynomial associated with the transformed system. By examining the cases of repeated and distinct characteristic roots, we show how subtle changes in the system parameters can lead to dramatically different outcomes. This is relevant to applications such as neural networks, where the interactions between neurons can display similarly complex behaviors.

To illustrate possible applications, we also develop a model for analyzing the interactions within neural systems that combines recursive equations with nonlinear dynamics. This model explores the evolution of neural signals over time, focusing on how different variables interact to influence overall system stability and activity patterns. It is built on three variables: $p_m$, representing the first neural activity signal; $q_m$, denoting the second neural response; and $r_m$, reflecting the combined influence of both signals. With it, we investigate how fluctuations in these signals affect both short-term dynamics and long-term stability. The recursive structure of the model allows us to track these variables over time, and its nonlinear terms simulate the feedback loops characteristic of neural systems. The model parameters modulate the interactions between the signals, and small constants are introduced to ensure numerical stability. Simulations under varying initial conditions show how changes in parameter values can lead to markedly different outcomes, such as oscillatory or stable states.

Visualizations such as time series, phase diagrams, and frequency analysis complement the theoretical findings by showing the system's behavior from several complementary perspectives. These methods are particularly useful for identifying patterns such as periodicity, bifurcations, or chaotic behavior, which are important for understanding how neural systems respond to external stimuli and internal perturbations. This approach improves our understanding of the dynamics underlying neural networks and provides a practical tool for simulating neural behavior. Through this analysis, we aim to bridge the gap between theoretical models and practical applications in fields such as neurobiology, artificial neural networks, and systems neuroscience.

The rest of the paper is organized as follows. In Section 2, we solve system (1.a) in closed form. Section 3 presents simulations that provide a dynamic analysis of neural activity and system stability using recursive models. Finally, Section 4 concludes the paper with a discussion of the findings and potential directions for future research.

2.

Resolution of the equation system in (1.a)

In this section, we present a systematic approach to solving the difference equations (1.a) for given initial conditions. Let $(p_m,q_m,r_m)$ be a solution of the system. The system may be undefined if any of the initial values is zero. To keep the solution well defined, the condition $r_{m+1}q_mp_m\neq0$ must hold for all $m\ge-1$, which guarantees that no singularities arise. From now on, we assume that $r_0q_{-k}p_{-k}\neq0$ for $k\in\{0,1\}$; this assumption is essential for the existence of a well-defined solution. Under these conditions, we can study the dynamics of the system and the behaviors it may exhibit over time.

To ease the analysis and provide a fresh perspective on the system (1.a), we introduce a strategic change of variables. This redefinition aims to simplify the relationships between the variables and allow for clearer insight into the nature of the system. The transformation is expressed as:

The purpose of this change of variables is to restructure the system into a more manageable form while retaining its key characteristics, which sets the stage for deriving the essential properties of the model. To carry out the simplification, we multiply the corresponding sides of the first and second equations in (1.a), obtaining:

Simplifying further, we express the system in terms of the transformed variables:

Through this process, the original system (1.a) is recast into the following bilinear form:

The resulting system has a bilinear structure, which makes it considerably easier to analyze and clarifies the interplay between the variables $\hat p_m$ and $\hat r_m$. Beyond aiding the solution of the system, the transformation provides insight into its long-term dynamics: the bilinear form allows us to identify parameter values and initial conditions for which the solutions converge, are periodic, or display other non-convergent behavior.

The nonlinear two-dimensional system of difference equations (2.b) was introduced by Stević and Tollu [17], who showed that it is solvable and gave a method for determining its general solution. Their analysis shows that some parameter values lead to simpler special cases. For instance, when $\gamma=0$, the first equation of (2.b) can be substituted into the second, which results in an equation with interlacing indices, a structure derived from a bilinear equation; this special case is solvable because of its simplified form. The case $\tau=0$ is dual to the case $\gamma=0$ and leads to a similar outcome. We therefore assume that $\gamma\tau\neq0$, so that the system retains its full nonlinear structure. Furthermore, to avoid ill-defined solutions, we assume that $\gamma\hat r_m+\delta\neq0$ and $\tau\hat p_m+\sigma\neq0$ for all $m$, which guarantees that every iterate is defined. Under these assumptions, we state the following lemma, taken from the work of Stević and Tollu [17], which lays the groundwork for the analysis of the system.
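The explicit equations of (2.b) are not reproduced in this version of the text. The sketch below assumes, consistently with the conditions just stated, that (2.b) reads $\hat p_{m+1}=(\alpha\hat r_m+\beta)/(\gamma\hat r_m+\delta)$ and $\hat r_{m+1}=(\varepsilon\hat p_m+\lambda)/(\tau\hat p_m+\sigma)$, and iterates it while checking at every step that $\gamma\hat r_m+\delta$ and $\tau\hat p_m+\sigma$ do not vanish. The function name `iterate_bilinear` is hypothetical, and exact comparison with zero is used only for simplicity.

```python
from typing import List, Tuple


def iterate_bilinear(alpha: float, beta: float, gamma: float, delta: float,
                     eps: float, lam: float, tau: float, sigma: float,
                     p0: float, r0: float, steps: int) -> Tuple[List[float], List[float]]:
    """Iterate the ASSUMED bilinear form of (2.b):
        p_{m+1} = (alpha*r_m + beta) / (gamma*r_m + delta),
        r_{m+1} = (eps*p_m + lam) / (tau*p_m + sigma),
    raising ZeroDivisionError as soon as a denominator vanishes."""
    p, r = [p0], [r0]
    for m in range(steps):
        den_p = gamma * r[m] + delta   # condition gamma*r_m + delta != 0
        den_r = tau * p[m] + sigma     # condition tau*p_m + sigma != 0
        if den_p == 0 or den_r == 0:
            raise ZeroDivisionError(f"a denominator vanishes at m = {m}")
        p.append((alpha * r[m] + beta) / den_p)
        r.append((eps * p[m] + lam) / den_r)   # p[m] is still the old value here
    return p, r
```

For floating-point data, replacing the exact test `== 0` by a small tolerance may be preferable, since a denominator that is merely tiny already produces huge iterates.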

Lemma 2.1. Let $\alpha,\beta,\gamma,\delta,\varepsilon,\lambda,\tau,\sigma\in\mathbb{R}$ be such that $\gamma(\alpha^2+\beta^2)\tau(\varepsilon^2+\lambda^2)\neq0$, and let $\mu_1$ and $\mu_2$ denote the roots of the quadratic polynomial:

Under these conditions, the system of difference equations given in (2.b) admits a closed-form solution. The general solution to this system can be described in two distinct cases, depending on whether the roots μ1 and μ2 coincide or differ.

i. Case 1: μ1=μ2. In the case where the polynomial Q(μ) has a repeated root μ2, the general solution to the system is given by:

This solution highlights how the behavior of the system evolves in the case of a repeated root, where the sequences (ˆpm) and (ˆrm) depend on both the initial values and the root μ2, with a linear relationship to the index m.

ii. Case 2: μ1≠μ2. When the roots μ1 and μ2 are distinct, the general solution takes a different form, reflecting the more complex interplay between the two roots:

This form of the solution captures the dynamics of the system when the two roots differ. Depending on the magnitudes of $\mu_1$ and $\mu_2$ and on whether they are real or complex, the system may exhibit oscillatory or exponential behavior, reflecting the underlying complexity of the difference equations.
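The displayed formulas of (2.b), $Q(\mu)$, and the two cases are not reproduced in this version of the text. The following sketch shows where a quadratic polynomial and the two solution shapes come from, under the assumption (ours, consistent with the conditions $\gamma\hat r_m+\delta\neq0$, $\tau\hat p_m+\sigma\neq0$ and the special cases discussed above) that (2.b) is the pair of Möbius maps in the first line. The normalization of $Q$ and of the constants may differ from that of [17].

```latex
\[
\begin{aligned}
&\hat p_{m+1}=\frac{\alpha\hat r_m+\beta}{\gamma\hat r_m+\delta},\qquad
 \hat r_{m+1}=\frac{\varepsilon\hat p_m+\lambda}{\tau\hat p_m+\sigma}
 \qquad\text{(assumed form of (2.b))},\\[4pt]
&\hat p_{m+2}=\frac{(\alpha\varepsilon+\beta\tau)\hat p_m+(\alpha\lambda+\beta\sigma)}
                   {(\gamma\varepsilon+\delta\tau)\hat p_m+(\gamma\lambda+\delta\sigma)},
 \qquad M=\begin{pmatrix}\alpha&\beta\\ \gamma&\delta\end{pmatrix}
          \begin{pmatrix}\varepsilon&\lambda\\ \tau&\sigma\end{pmatrix},\\[4pt]
&\hat p_{2m+s}=\frac{u_m}{v_m},\qquad
 \begin{pmatrix}u_m\\ v_m\end{pmatrix}=M^m\begin{pmatrix}\hat p_s\\ 1\end{pmatrix},
 \qquad s\in\{0,1\},\\[4pt]
&Q(\mu)=\det(\mu I-M)=\mu^2-(\alpha\varepsilon+\beta\tau+\gamma\lambda+\delta\sigma)\,\mu
        +(\alpha\delta-\beta\gamma)(\varepsilon\sigma-\lambda\tau),\\[4pt]
&w_{m+2}=(\alpha\varepsilon+\beta\tau+\gamma\lambda+\delta\sigma)\,w_{m+1}
         -(\alpha\delta-\beta\gamma)(\varepsilon\sigma-\lambda\tau)\,w_m,
 \qquad w\in\{u,v\}\ \text{(Cayley--Hamilton)},\\[4pt]
&\mu_1=\mu_2\neq0:\ \hat p_{2m+s}=\frac{a_1+a_2m}{b_1+b_2m},\qquad
 \mu_1\neq\mu_2:\ \hat p_{2m+s}=\frac{a_1\mu_1^m+a_2\mu_2^m}{b_1\mu_1^m+b_2\mu_2^m}.
\end{aligned}
\]
```

Here $a_1,a_2,b_1,b_2$ depend on $s$ and on the initial values. In the repeated-root case, this is one way to read the linear dependence on $m$ described in Case 1. The same argument with the two matrices multiplied in the opposite order applies to $(\hat r_m)$; both products have the same trace and determinant, hence the same $Q$.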

Remark 2.1. When specific relationships hold among the parameters of the system, simpler solutions can be derived. Specifically, if $\beta\gamma=\alpha\delta$ or $\lambda\tau=\varepsilon\sigma$, system (2.b) simplifies considerably. When $\beta\gamma=\alpha\delta$, the sequences $(\hat p_m)$ and $(\hat r_m)$ are eventually constant. In this case, we have the following:

Similarly, if λτ=εσ, the sequences (ˆpm) and (ˆrm) are also reduced to constant expressions, but with a different set of parameters governing their values:

These simplified cases are particularly useful when certain parameter combinations lead to degeneracies in the system, enabling us to derive constant sequences for (ˆpm) and (ˆrm), which can make further analysis much more manageable.
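Assuming, as the conditions in this section suggest, that the first equation of (2.b) is $\hat p_{m+1}=(\alpha\hat r_m+\beta)/(\gamma\hat r_m+\delta)$ and the second is $\hat r_{m+1}=(\varepsilon\hat p_m+\lambda)/(\tau\hat p_m+\sigma)$ (the displayed formulas are not reproduced here), the case $\beta\gamma=\alpha\delta$ can be checked in one line, using $\gamma\neq0$ and $\tau\hat p_m+\sigma\neq0$; the case $\lambda\tau=\varepsilon\sigma$ is symmetric. This is a sketch under that assumption, not a transcription of the formulas of [17].

```latex
\[
\begin{aligned}
\beta\gamma=\alpha\delta\;&\Longrightarrow\;
\gamma(\alpha\hat r_m+\beta)=\alpha\gamma\hat r_m+\alpha\delta=\alpha(\gamma\hat r_m+\delta)
\;\Longrightarrow\;\hat p_{m+1}=\frac{\alpha}{\gamma},\quad m\ge0,\\
&\Longrightarrow\;\hat r_{m+1}=\frac{\varepsilon\alpha/\gamma+\lambda}{\tau\alpha/\gamma+\sigma}
=\frac{\alpha\varepsilon+\gamma\lambda}{\alpha\tau+\gamma\sigma},\quad m\ge1.
\end{aligned}
\]
```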

Remark 2.2. For the quadratic polynomial $Q(\mu)$, a necessary and sufficient condition for its roots $\mu_1$ and $\mu_2$ to be distinct is that its discriminant does not vanish, that is, that the following inequality holds:

Under this condition, the roots $\mu_1$ and $\mu_2$ do not coincide, so the repeated-root case is excluded and the solution is given by Case 2 of Lemma 2.1.
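The inequality of Remark 2.2 is not reproduced here. Assuming $Q(\mu)=\mu^2-T\mu+D$ with $T=\alpha\varepsilon+\beta\tau+\gamma\lambda+\delta\sigma$ and $D=(\alpha\delta-\beta\gamma)(\varepsilon\sigma-\lambda\tau)$ (the characteristic polynomial of the product of the coefficient matrices of the two bilinear maps, which rests on an assumption about the form of (2.b)), the case distinction reduces to the sign of $T^2-4D$. The helper name `classify_roots` and the tolerance `tol` are illustrative.

```python
import math


def classify_roots(alpha, beta, gamma, delta, eps, lam, tau, sigma, tol=1e-12):
    """Classify the roots of the ASSUMED quadratic Q(mu) = mu**2 - T*mu + D."""
    T = alpha * eps + beta * tau + gamma * lam + delta * sigma    # trace
    D = (alpha * delta - beta * gamma) * (eps * sigma - lam * tau)  # determinant
    disc = T * T - 4.0 * D                                        # discriminant
    if abs(disc) <= tol:
        return "repeated", (T / 2.0, T / 2.0)
    if disc > 0:
        root = math.sqrt(disc)
        return "distinct real", ((T + root) / 2.0, (T - root) / 2.0)
    root = math.sqrt(-disc)
    return "complex conjugate", (complex(T / 2.0, root / 2.0),
                                 complex(T / 2.0, -root / 2.0))


if __name__ == "__main__":
    # Illustrative parameters only (not taken from the paper).
    print(classify_roots(1, 2, 1, 1, 2, 1, 1, 3))
```

A tolerance is used because, with floating-point parameters, an exactly vanishing discriminant is rarely computed as exactly zero.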

Using Lemma 2.1, we derive closed-form expressions for the solutions of system (1.a), which leads to the following theorem.

Theorem 2.1. Let $\alpha,\beta,\gamma,\delta,\varepsilon,\lambda,\tau,\sigma\in\mathbb{R}$ satisfy $\gamma(\alpha^2+\beta^2)\tau(\varepsilon^2+\lambda^2)\neq0$. Then the system of difference equations (1.a) admits a general closed-form solution, which is expressed in two cases according to whether the roots $\mu_1$ and $\mu_2$ are identical or distinct.

i. Case 1: Under the assumption specified in (2.c), the general solution to the system is:

for s∈{0,1}, and m≥0.

ii. Case 2: When the assumption in (2.c) is not satisfied, the general solution becomes:

for s∈{0,1}, and m≥0.

Proof. To prove this, we first express the system (1.a) in a more suitable form by introducing the following changes of variables:

From the second equation, we find:

Substituting this result into the first equation of the system yields:

Thus, we obtain:

Finally, substituting the expressions (2.d) and (2.e) into the second change of variables in (2.a), we obtain $r_m=p_{m-1}q_{m-1}\hat r_m$ for $m\ge0$. Splitting into even and odd indices gives $r_{2m}=p_{2m-1}q_{2m-1}\hat r_{2m}$ and $r_{2m+1}=p_{2m}q_{2m}\hat r_{2m+1}$ for $m\ge0$. Each term is thus expressed through preceding values of the variables and the parameters, which reflects the interdependence of the system's components under the applied transformations. □

Remark 2.3. For the three-dimensional system (1.a), we analyzed two main cases for the roots of the characteristic polynomial: repeated roots and distinct roots. In the repeated-root case, the behavior is characterized by stability or linear growth, with solution formulas in which the index $m$ enters linearly alongside the repeated root $\mu_2$; this reflects simpler dynamics with a strong dependence on the initial values. In the distinct-root case, the solutions exhibit more complex dynamics due to the interaction between the roots $\mu_1$ and $\mu_2$: depending on their magnitudes and signs, the system may display oscillatory behavior or exponential growth. These variations directly affect the stability and dynamic nature of the system. The findings underscore the role of the bilinear transformation in simplifying the three-dimensional system and provide a framework for understanding and interpreting diverse behaviors in difference systems.
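The dependence on $\mu_1$ and $\mu_2$ described in this remark can be checked numerically. The sketch below assumes (2.b) is the pair of Möbius maps $\hat p_{m+1}=(\alpha\hat r_m+\beta)/(\gamma\hat r_m+\delta)$, $\hat r_{m+1}=(\varepsilon\hat p_m+\lambda)/(\tau\hat p_m+\sigma)$ (an assumption, since the displayed system is not reproduced here). It writes $\hat p_{2m}=u_m/v_m$ with $(u_m,v_m)^T=M^m(\hat p_0,1)^T$, where $M$ is the product of the two coefficient matrices, and compares the distinct-root form $c_1\mu_1^m+c_2\mu_2^m$ with direct iteration. The function names and parameter values are hypothetical.

```python
import numpy as np


def p_hat_direct(A, B, p0, r0, steps):
    """Iterate the assumed Möbius pair; return [p_hat_0, ..., p_hat_steps]."""
    (alpha, beta), (gamma, delta) = A
    (eps, lam), (tau, sig) = B
    p, r = p0, r0
    out = [p]
    for _ in range(steps):
        # simultaneous update: both right-hand sides use the old (p, r)
        p, r = (alpha * r + beta) / (gamma * r + delta), (eps * p + lam) / (tau * p + sig)
        out.append(p)
    return out


def p_hat_even_closed_form(A, B, p0, m):
    """p_hat_{2m} = u_m / v_m, with (u_m, v_m) = M^m (p0, 1) expressed through
    the eigenvalues mu1 != mu2 of M = A @ B (the roots of Q in this sketch)."""
    M = np.asarray(A, dtype=float) @ np.asarray(B, dtype=float)
    mu1, mu2 = np.linalg.eigvals(M)
    w0 = np.array([p0, 1.0])
    w1 = M @ w0
    # w_m = c1*mu1**m + c2*mu2**m, fitted to c1 + c2 = w0 and c1*mu1 + c2*mu2 = w1
    c2 = (w1 - mu1 * w0) / (mu2 - mu1)
    c1 = w0 - c2
    u, v = c1 * mu1**m + c2 * mu2**m
    return float(np.real(u / v))


if __name__ == "__main__":
    A = [[1.0, 2.0], [1.0, 1.0]]   # illustrative alpha, beta, gamma, delta
    B = [[2.0, 1.0], [1.0, 3.0]]   # illustrative epsilon, lambda, tau, sigma
    direct = p_hat_direct(A, B, p0=0.5, r0=0.2, steps=10)
    for m in range(6):
        print(m, direct[2 * m], p_hat_even_closed_form(A, B, 0.5, m))
```

The closed form assumes $\mu_1\neq\mu_2$; for complex-conjugate roots the imaginary parts cancel in exact arithmetic, which is why only the real part is returned.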

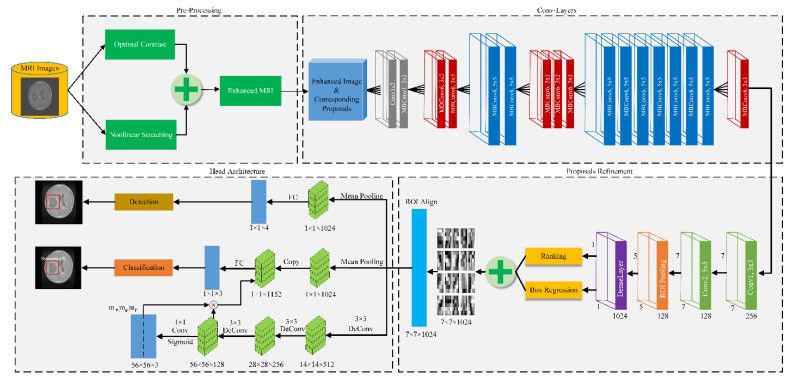

Example 2.1. In this example, we examine a system defined by three sequences: (pm), (qm), and (rm). The evolution of these sequences is governed by the following recursive equations:

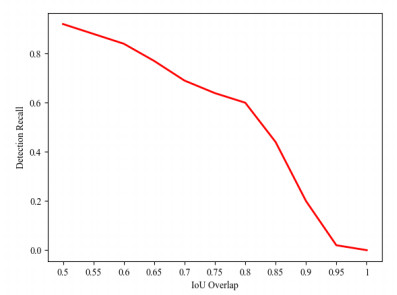

The initial values are specified as p1=0.5, p0=−0.3, q1=0.2, q0=−0.1, and r1=−0.2. To illustrate the behavior of these sequences, we plot {pm}, {qm}, and {rm} over multiple iterations, as shown in Figure 1.

In Figure 1, the sequences $(p_m)$, $(q_m)$, and $(r_m)$ display distinct behaviors over several iterations. The first plot (top left) shows the evolution of $p_m$, whose values oscillate markedly between positive and negative values before stabilizing. In the second plot (top middle), the sequence $(q_m)$ exhibits extreme fluctuations, reaching very large positive and negative values. The third plot (top right) shows the sequence $(r_m)$, whose values remain close to zero with only moderate fluctuations. The second row of Figure 1 shows these sequences separately for even and odd indices. The fourth plot displays $p_{2m}$ and $p_{2m+1}$, revealing a slight difference between the even- and odd-indexed values, both of which keep an oscillatory pattern. The fifth plot shows $q_{2m}$ and $q_{2m+1}$, with extreme variations and larger magnitudes for the odd-indexed values. Finally, the sixth plot shows $r_{2m}$ and $r_{2m+1}$, which remain relatively close to zero, with mild fluctuations between the even- and odd-indexed subsequences.
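The second-row plots in this example separate each sequence by index parity. Since the initial values of (1.a) start at $m=-1$, a small helper that knows the index of the first stored term avoids off-by-one errors. The name `split_even_odd` and the `first_index` argument are illustrative; the two returned lists can be passed to any plotting routine.

```python
from typing import List, Sequence, Tuple


def split_even_odd(seq: Sequence[float], first_index: int = 0) -> Tuple[List[float], List[float]]:
    """Split x_m into (x_{2m}) and (x_{2m+1}); seq[0] is the term x_{first_index}."""
    offset = first_index % 2          # 0 if seq[0] is even-indexed, 1 if odd (Python % is non-negative)
    evens = list(seq[offset::2])      # x_{2m}
    odds = list(seq[1 - offset::2])   # x_{2m+1}
    return evens, odds


if __name__ == "__main__":
    # Terms x_{-1}, x_0, x_1, x_2 stored from index -1.
    print(split_even_odd([9.0, 10.0, 11.0, 12.0], first_index=-1))
```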

Example 2.2. In this example, we examine a system defined by three sequences: (pm), (qm), and (rm). The evolution of these sequences is governed by the following recursive equations:

The initial values are specified as p1=1.2, p0=1.5, q1=1.4, q0=1.5, and r1=−0.5. To illustrate the behavior of these sequences, we plot {pm}, {qm}, and {rm} over multiple iterations, as shown in Figure 2.

In Figure 2, the sequences $(p_m)$, $(q_m)$, and $(r_m)$ evolve under different recursive equations and initial conditions. The first plot (top left) shows the sequence $(p_m)$, which increases steadily without noticeable oscillations. The second plot (top middle) shows the sequence $(q_m)$, whose values rise sharply with each iteration. Similarly, the third plot (top right) shows the sequence $(r_m)$, which follows a pattern comparable to that of $(q_m)$, with steadily increasing values. The second row of Figure 2 shows these sequences separately for even and odd indices. The fourth plot shows $p_{2m}$ and $p_{2m+1}$, with a slight divergence between the even- and odd-indexed values, both of which follow a steady increasing trend. The fifth plot shows $q_{2m}$ and $q_{2m+1}$, revealing substantial growth, with larger values for the odd indices. Finally, the sixth plot shows $(r_{2m})$ and $(r_{2m+1})$, both of which follow a similar upward trend, with the odd-indexed values growing more rapidly.

3.

Simulation: dynamic analysis of neural activity and system stability using recursive models

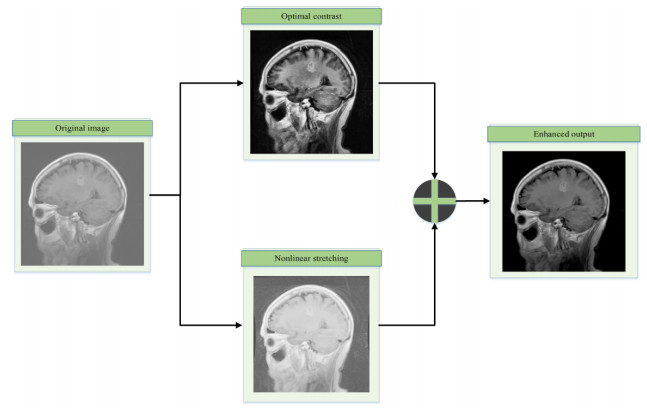

In this section, we present a model of the interactions within neural systems that combines recursive equations with nonlinear dynamics. The model is used to explore how neural signals evolve over time, how the variables interact to influence overall system stability and activity patterns, and how fluctuations in neural activity affect both short-term dynamics and long-term stability under varying conditions.

The system revolves around three critical variables: pm, representing the first neural activity signal; qm, denoting the second neural response; and rm, which reflects the combined influence of both neural signals on system stability. These variables evolve based on several key parameters: α, which affects the influence of the external signal on the first neural activity, is set at 0.7; β, which modulates the interaction between the neural signals, is set at 0.5; γ, impacting the internal feedback on the second neural response, is 0.4; and δ, governing the influence of the first neural signal on overall system dynamics, is set at 0.6. Additional parameters, such as ε and λ, further regulate complex interdependencies within the system.

The model employs recursive iterations to track the dynamic behavior of these variables over time, carefully incorporating nonlinear terms to capture the complex feedback loops characteristic of neural systems. Small constants are introduced to avoid singularities and ensure numerical stability. Various plots, including time series of neural activity, phase diagrams, and heatmaps of signal distributions, are used to visualize how changes in system parameters affect overall behavior. The analysis also includes frequency domain investigations and topological maps to illustrate connectivity and functional relationships within the neural network.
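The text does not specify how the small constants enter the model. One common form of such regularization, shown here only as a hypothetical sketch, replaces a denominator whose magnitude falls below a threshold `eps` by `±eps`, keeping its sign. The helper name `safe_ratio` and the default value of `eps` are assumptions.

```python
def safe_ratio(num: float, den: float, eps: float = 1e-8) -> float:
    """Return num / den, with den pushed away from zero to magnitude eps.

    Hypothetical regularization: the sign of den is kept (den == 0 maps to +eps),
    so the ratio stays finite and keeps its sign near a singularity.
    """
    if abs(den) < eps:
        den = eps if den >= 0 else -eps
    return num / den


if __name__ == "__main__":
    print(safe_ratio(6.0, 3.0), safe_ratio(1.0, 0.0), safe_ratio(1.0, -1e-12))
```

A signed clamp only changes near-singular steps; adding a constant to every denominator is a simpler alternative, but it slightly perturbs well-conditioned steps as well.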

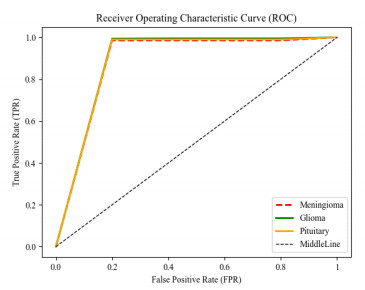

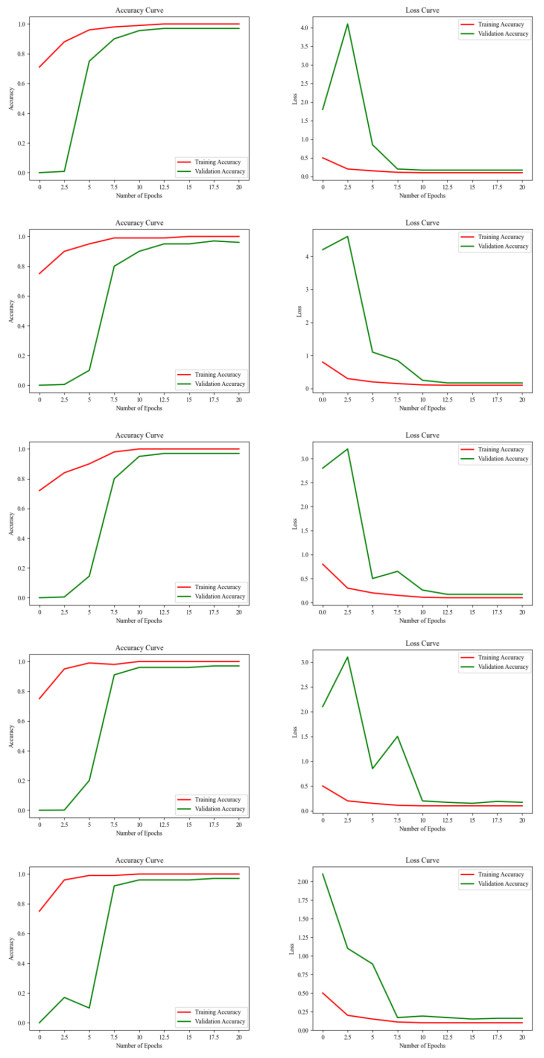

Figure 3 presents the time series of the three primary variables $p_m$, $q_m$, and $r_m$, which represent different aspects of neural activity. Tracking each variable over time gives an initial picture of how the system evolves: the time series reveal fluctuations, possible stability points, or oscillations, which can indicate periodic behavior or instability in the neural system. However, while this plot shows the individual dynamics of each variable, it does not reveal how the variables interact with one another. To examine these relationships, we turn to the phase plot in Figure 4, which shows the dynamic interplay between $p_m$ and $q_m$.

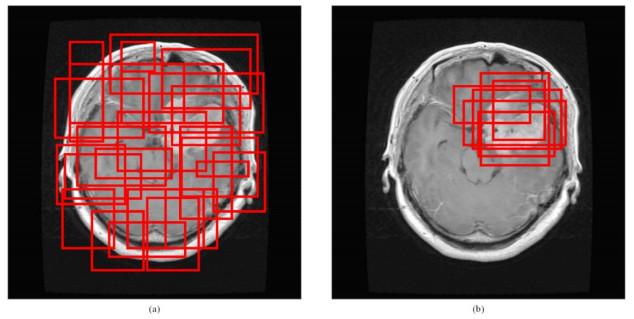

The phase plot in Figure 4 illustrates the dynamic relationship between pm and qm by plotting their values against each other in a two-dimensional space. This type of plot provides a clear view of how these two variables interact over time, revealing potential cycles, attractors, or chaotic behavior. Phase plots are particularly useful for identifying recurring patterns that may not be visible in the time series data. By examining this plot, we can discern whether the system exhibits periodic behavior, remains stable, or shows signs of chaos. The next logical step is to examine the frequency components of these variables, as understanding the oscillations at different frequencies provides further insight into the system's behavior. This leads us to the frequency analysis in Figure 5.
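As a numerical complement to the phase plot, the period of a (numerically) periodic orbit can be estimated by finding the smallest shift $k$ under which the tail of the sequence repeats to within a tolerance. This sketch is ours, not the procedure used for the figures; `max_period`, `tail`, and `tol` are illustrative choices. Keeping only the last `tail` points discards the transient before the orbit settles on an attractor.

```python
from typing import Optional, Sequence


def detect_period(x: Sequence[float], max_period: int = 50, tail: int = 200,
                  tol: float = 1e-6) -> Optional[int]:
    """Smallest k <= max_period with |x[m+k] - x[m]| < tol over the last `tail`
    points of x, or None if no such k exists (e.g. aperiodic or divergent data)."""
    data = list(x)[-tail:]
    for k in range(1, max_period + 1):
        if len(data) <= k:
            break
        if all(abs(data[m + k] - data[m]) < tol for m in range(len(data) - k)):
            return k
    return None


if __name__ == "__main__":
    print(detect_period([1.0, 2.0, 3.0] * 30))
```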

Figure 5 shows the frequency analysis of the variables pm and qm using Fourier transforms. This analysis decomposes the time-domain signals into their frequency components, highlighting the dominant frequencies or oscillatory patterns that govern the system's dynamics. Peaks in the frequency spectrum indicate the presence of strong periodic components, while a spread of frequencies may point to chaotic or broadband behavior. This frequency analysis complements the time series and phase plot by identifying the key frequencies at which the system oscillates. By isolating these frequencies, we can better understand the neural system's stability and rhythmic behavior. Having examined both the time-domain and frequency-domain characteristics, the next step is to explore how the system responds to external stimuli, as illustrated in Figure 6.
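The text does not state how the spectra in Figure 5 were computed. A common approach, sketched here with NumPy's real FFT (`numpy.fft.rfft` and `numpy.fft.rfftfreq`), is to remove the mean, take the one-sided amplitude spectrum, and report the frequency of the largest non-zero-frequency peak. With `dt=1`, frequencies are in cycles per iteration. The function names are illustrative.

```python
import numpy as np


def amplitude_spectrum(x, dt: float = 1.0):
    """One-sided amplitude spectrum of a real sequence sampled every dt."""
    x = np.asarray(x, dtype=float)
    x = x - x.mean()                          # remove the zero-frequency (mean) component
    amps = np.abs(np.fft.rfft(x)) / len(x)    # magnitudes of the non-negative frequencies
    freqs = np.fft.rfftfreq(len(x), d=dt)     # matching frequency grid
    return freqs, amps


def dominant_frequency(x, dt: float = 1.0) -> float:
    """Frequency of the largest spectral peak, skipping the zero-frequency bin."""
    freqs, amps = amplitude_spectrum(x, dt)
    return float(freqs[np.argmax(amps[1:]) + 1])


if __name__ == "__main__":
    k = np.arange(256)
    signal = np.sin(2 * np.pi * 8 * k / 256) + 0.5   # 8 cycles over 256 steps
    print(dominant_frequency(signal))
```

A single dominant peak corresponds to the "strong periodic components" discussed above, whereas energy spread over many bins corresponds to broadband behavior.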

Figure 6 examines the system's response to external neural stimuli, illustrating how the variables pm, qm, and rm react when subjected to an external perturbation. The response graph emphasizes the system's adaptability, illustrating how quickly and effectively the neural signals stabilize or oscillate following a stimulus. Understanding the system's responsiveness is essential for evaluating its robustness and ability to maintain stability under varying conditions. This analysis identifies whether the system can recover from disturbances or if it transitions into a new behavioral state. To further investigate the system's internal structure, we now proceed to Figure 7, which depicts the functional connectivity within the system.

Figure 7 illustrates a functional connectivity map, depicting the relationships and interactions between different components of the neural system. Each node in the map represents a variable, and the edges indicate the strength and direction of their interactions. This visualization helps uncover how the neural variables influence each other, highlighting key nodes that drive system behavior. By examining this map, we can identify densely connected regions within the system, which may be critical for maintaining overall stability or contributing to instability. The next figure builds upon this analysis by investigating the structural organization of the neural system using topological maps.
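How the connectivity map in Figure 7 was constructed is not stated. One simple possibility, assumed here purely for illustration, is to correlate the time series pairwise with `numpy.corrcoef` and keep an edge whenever the absolute correlation reaches a threshold. Pearson correlation is symmetric, so it yields edge strengths but not directions; a directed measure, such as a lagged correlation, would be needed for the latter. The function name and the threshold of 0.5 are arbitrary choices.

```python
import numpy as np


def connectivity_matrix(series, names, threshold=0.5):
    """Pairwise Pearson correlations between the rows of `series`, plus the
    undirected edges (name_i, name_j, corr) with |corr| >= threshold."""
    data = np.asarray(series, dtype=float)   # one row per variable, one column per time step
    corr = np.corrcoef(data)
    edges = []
    for i in range(len(names)):
        for j in range(i + 1, len(names)):
            if abs(corr[i, j]) >= threshold:
                edges.append((names[i], names[j], float(corr[i, j])))
    return corr, edges


if __name__ == "__main__":
    t = np.linspace(0.0, 10.0, 200)
    p = np.sin(t)
    corr, edges = connectivity_matrix([p, 2 * p + 1, -p], ["p", "q", "r"])
    print(edges)
```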

Figure 8 presents a topological brain map, a spatial representation of the neural network's structure. The topological map demonstrates how neural components are organized and interconnected, providing insights into the system's overall architecture. Clusters of nodes or regions with strong connectivity highlight functional modules that contribute to specific behaviors or functions. Through topological analysis, we gain a better understanding of the neural system's resilience and efficiency in processing information. By visualizing the layout and connections, we develop a deeper appreciation of how the network is structured to support stability and adaptability. From here, we progress to Figure 9, which offers a detailed view of the neural network graph, elaborating on its structural complexity.

Figure 9 illustrates the neural network graph, depicting the detailed interconnections between nodes in the system. The graph emphasizes the relationships between individual neural components, showing how they are linked to form a cohesive network. This representation is critical for understanding how information is transmitted through the network and how the overall system behaves under different conditions. By analyzing the graph, we can pinpoint key nodes and pathways that play a significant role in determining the system's overall stability and performance. The structural insights gained here are further enhanced by the following figure, which provides a three-dimensional visualization of neural activity across time and space.

In Figure 10, we see a three-dimensional visualization of neural activity in terms of the three key variables $p_m$, $q_m$, and $r_m$. Plotting the trajectory $(p_m,q_m,r_m)$ shows how these variables evolve jointly over time and can reveal complex patterns, such as oscillations or chaotic behavior, that are not easily observed in two-dimensional plots. While Figure 10 gives an overall impression of how the variables interact, it may hide finer details about how an individual variable, such as $p_m$, behaves over specific ranges. The heatmap in Figure 11 therefore focuses on $p_m$ alone, highlighting concentrations, clusters, or anomalies that may not be obvious in a 3D plot.

Figure 11 displays a heatmap of the pm values, offering a visual representation of the distribution and variation of pm across the simulation. The heatmap uses color gradients to indicate the intensity of pm at different points in the data, enabling us to quickly identify regions of high or low activity. Areas with more intense colors correspond to higher values of pm, while lighter areas indicate lower values. Heatmaps are particularly useful for detecting spatial patterns or clusters of activity within the data that might not be evident from the time series or phase plots. This visualization provides a more comprehensive view of the data by mapping the values onto a two-dimensional grid, making it easier to spot regions of interest or anomalies in the system's behavior.

The simulation results agree well with the theoretical predictions, which supports the model's ability to represent the complex dynamics of neural systems. Overall, the model captures the interactions between the key variables $p_m$, $q_m$, and $r_m$, as well as the periodic fluctuations and chaotic behaviors that are characteristic of neural systems, consistent with the scenarios assumed in the theoretical design. In the time domain, the graphs show that under certain conditions the system exhibits periodic behavior, with the variables alternating between relative stability and mild fluctuations, in line with theoretical expectations about the stability of neural systems under normal conditions. The phase diagrams also reveal periodic attractors, supporting the hypothesis that the system can reach a state of dynamic equilibrium in which the variables interact in a balanced manner over time. These results are an important step toward verifying the theoretical framework on which the model is based.

On the other hand, when the parameters $\alpha$, $\beta$, $\gamma$, and $\delta$ were changed substantially, unexpected behaviors appeared: the patterns evolved into chaotic behavior or new attractors emerged. This reflects the complexity of the system, which does not behave stably under all conditions. Such sudden changes highlight the system's sensitivity to parameter variations and call for further analysis of the mechanisms behind these behaviors.

The Fourier analysis showed strong peaks at certain frequencies, whereas chaotic cases displayed broadband, inhomogeneous frequency components. This suggests that dominant frequencies correspond to stable signals under certain conditions, while frequency dispersion indicates disorder or chaos, which helps characterize the system's response to parameter changes.

Overall, this analysis shows that the model can simulate neural systems across a wide range of scenarios. Beyond reproducing the expected interactions, it also represents unexpected patterns and chaotic behaviors, which may call for more complex models or additional factors to distinguish these phenomena. The model's adaptability to parameter changes makes it a useful tool for analyzing neural systems under a variety of conditions. These results open new avenues for research on managing stability in neural systems under changing influences and may help in designing strategies to predict or control the behavior of complex neural systems in medical applications and scientific research.

4.

Conclusions

In this paper, we analyzed the system of difference equations (1.a) through its bilinear form (2.b), with the aim of understanding its dynamic properties by means of mathematical transformations and computational techniques. The key step was a transformation that reduced the original system (1.a) to the bilinear form (2.b). This transformation clarified the system's underlying behavior, enabled the direct derivation of its key properties, and allowed a more comprehensive exploration of its structural features.

Our analysis, summarized in Lemma 2.1, shows that the system of difference equations has a closed-form solution under the condition $\gamma(\alpha^2+\beta^2)\tau(\varepsilon^2+\lambda^2)\neq 0$. This result guarantees the system's solvability and relates the form of the solutions to the parameters through the discriminant of the quadratic polynomial $Q(\mu)$. Distinguishing between repeated and distinct roots of this characteristic polynomial yields two qualitatively different behaviors. In Case 1 (repeated roots), the solutions depend on the index $m$ in a simpler, linear manner. In Case 2 (distinct roots), the dynamics are richer and include oscillatory and exponential behaviors. This distinction shows how strongly the parameter values can influence the system's evolution and lead to different qualitative behaviors.
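The role of repeated versus distinct roots can be illustrated on the simplest bilinear equation. The sketch below treats the scalar equation $x_{m+1} = (a x_m + b)/(c x_m + d)$, a hypothetical stand-in chosen for brevity and not the system (2.b) or the formulas of Lemma 2.1. Under the standard substitution $c x_m + d = y_{m+1}/y_m$ (with $c \neq 0$) it becomes the linear recurrence $y_{m+2} = (a+d)y_{m+1} - (ad-bc)y_m$, whose characteristic polynomial is $\mu^2 - (a+d)\mu + (ad-bc)$. A repeated root $\mu$ gives $y_m = (A + Bm)\mu^m$, a factor linear in $m$, while distinct roots give $y_m = A\mu_1^m + B\mu_2^m$, which oscillates when the roots are complex. The function names and the parameters $a, b, c, d$ are illustrative assumptions.

```python
import cmath


def iterate_bilinear(a, b, c, d, x0, n):
    """Direct iteration of x_{m+1} = (a*x_m + b) / (c*x_m + d) for n steps."""
    xs = [x0]
    for _ in range(n):
        x = xs[-1]
        xs.append((a * x + b) / (c * x + d))
    return xs


def closed_form_bilinear(a, b, c, d, x0, m, tol=1e-12):
    """x_m from the roots of mu^2 - (a+d)*mu + (a*d - b*c).
    Assumes c != 0, a*d - b*c != 0 (so no root is zero), and that no
    iterate hits the pole x = -d/c."""
    y1 = c * x0 + d                        # y_0 = 1, y_1 = c*x_0 + d
    disc = (a + d) ** 2 - 4 * (a * d - b * c)
    if abs(disc) < tol:                    # Case 1: repeated root mu
        mu = (a + d) / 2
        B = y1 / mu - 1
        def y(k):
            return (1 + B * k) * mu ** k   # factor linear in k times mu^k
    else:                                  # Case 2: distinct (possibly complex) roots
        s = cmath.sqrt(disc)
        mu1, mu2 = (a + d + s) / 2, (a + d - s) / 2
        B = (y1 - mu1) / (mu2 - mu1)
        A = 1 - B
        def y(k):
            return A * mu1 ** k + B * mu2 ** k
    # Undo the substitution c*x_m + d = y_{m+1} / y_m.
    return ((y(m + 1) / y(m) - d) / c).real


# Repeated root mu = 2: here x_m = 2 / (m + 2), so x_2 = 0.5.
print(closed_form_bilinear(2, 0, 1, 2, 1.0, 2))
```

Comparing `closed_form_bilinear` against `iterate_bilinear` for a few parameter sets (real distinct, repeated, and complex roots) is a cheap consistency check of the case split; for the three-variable system of this paper the same check would use the formulas of Lemma 2.1 instead.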

The simulations under diverse initial conditions and parameter values further showed that the model captures a wide range of dynamical behaviors, such as oscillations, stability, and growth. These findings suggest potential applications to neural system dynamics, population models, and other complex interactions, since the model's ability to represent different dynamic regimes makes it useful across several scientific fields.

These results suggest several directions for future research. A natural next step is to extend the model to higher-dimensional systems, which could give a more complete picture of multi-variable dynamic interactions. Incorporating further nonlinearities would make the model more realistic and allow it to capture more intricate behaviors. Another promising direction is to develop computational methods that handle singularities efficiently, so that more complex systems can be solved. Building on this work in these ways could offer deeper insight into dynamical systems and broaden their applications in fields such as neuroscience, population biology, and ecological modeling.

In conclusion, this paper contributes to the understanding of systems of difference equations and their dynamic behaviors, and it lays groundwork for further advances in both theoretical analysis and practical applications. The proposed directions for future research may enrich the field with new perspectives and opportunities for exploration.

Use of Generative-AI tools declaration

The author declares he has not used Artificial Intelligence (AI) tools in the creation of this article.

Conflict of interest

The author declares no conflicts of interest in this paper.
