1. Introduction

Many phenomena in nature can be modeled mathematically by means of partial differential equations (PDEs) that approximate the underlying physical principles. The advection–diffusion–reaction (ADR) equation describes the spatio-temporal evolution of a physical quantity in a flowing medium, such as water or air [1]. This evolution combines different physical phenomena: the transport of the physical quantity driven by the advection velocity field of the flowing medium, its diffusion from highly dense areas to less dense areas, and the reaction with other components, represented by source terms. The ADR equation plays an important role in many research fields, such as heat conduction [2], transport of particles [3], chemical reactions [4], and wildfire propagation [5].

In most cases, there are no analytical solutions for these PDEs, so they have to be solved by means of numerical methods. The finite volume (FV) method [6,7] is based on the direct discretization of the integral form of the conservation laws, and this form does not require the fluxes to be continuous. Because it stays close to the physical conservation laws, the FV method is well suited to equations such as the ADR equation. In this article, the FV-based first-order upwind (FOU) scheme is used to discretize the conservation laws by assuming a piecewise constant distribution of the conserved variables within the computational cells [8,9].

The large number and diversity of problems, together with the vast spatial domains considered in realistic scenarios, demand lower computational costs. In recent years, this has led to the development of a wide range of mathematical strategies and tools in the scientific literature to facilitate, improve, and increase the calculation capacity of the classical methods used in the framework of fluid mechanics. Among them, reduced-order modeling is one of the most popular. It was originally developed as the reduced basis strategy for predicting the nonlinear static response of structures [10]. Intrusive reduced-order models (ROMs) based on proper orthogonal decomposition (POD) [11] are among the most interesting tools in fluid mechanics [12]. Intrusive ROMs are intended to be alternative numerical schemes that replace the calculations performed by classical schemes, also called full-order models (FOMs), to save computational cost without losing accuracy in the solutions. These ROMs are solved in a reduced space whose dimension is much smaller than that of the physical space, which is why they are more efficient than FOMs [13].

The correct resolution of PDEs by means of numerical schemes requires a thorough calibration of the parameters on which the mathematical model depends. It is therefore of great interest to try to find alternatives that help in this calibration objective in parametrized problems [14]. In this sense, ROMs can be useful for performing multiple simulations in a less expensive way to map different values of the input parameter. ROMs are data-driven methods, which means that they need a training phase prior to their resolution. Training snapshots impose some computational limits on the parameters that define the problem (such as the final time [15] or the initial condition of the advection velocity) that ROMs cannot exceed when computing their solutions. However, it is highly interesting to study what can be done to overcome these limits by means of ROMs.

The aim of this article is to solve the advection–diffusion–reaction equation by means of parametrized ROMs to obtain solutions for parameter values that do not belong to the training set. There are different strategies available in the literature, such as those based on the reduced-basis methods [16,17], interpolation in matrix manifolds [18], the shifted POD method [19], the extrapolation technique carried out in [20], the transformed modes used in [21] and proposed in [22,23], or non-intrusive ROMs such as autoencoders and neural networks [24,25,26]. The technique used in this article is based on generating a training set composed of multiple samples computed with arbitrary values of the input parameters (training values), as done in [27]. The ROM is then solved for some values of interest of the input parameters (target values).

The novelty of this paper lies in the detailed analysis of the composition of the training set, taking into account the particular needs of each parameter studied. To do this, it is necessary to find out what size the training set should have for the ROM to be sufficiently accurate, whether a minimum number of samples is required or a single sample is enough, and what the relationship is between the ROM's configuration parameters and the number of training samples. This part of the study is done by applying the parametrized ROM strategy to the two-dimensional (2D) ADR equation, where the advection velocity, the diffusion coefficient, and the parameters that define the initial condition (IC) are considered as input parameters. The test cases solved are designed to evaluate the prediction of ROMs with all possible input parameters when considering the 2D ADR equation.

Within the framework of climate change, forest fires will become more frequent in the following years, with devastating impacts on society [28]. To minimize their risk, mathematical models of forest fire propagation have been developed to predict the evolution of a fire to adequately plan suppression actions, improve the safety of firefighting brigades, and reduce fire damage. Considering all the conclusions obtained via the 2D ADR equation, the parametrized ROM strategy is extended to the 2D wildfire propagation (WP) model [5,29,30], which governs the spatio-temporal evolution of fire in a wildfire and whose resolution in the reduced space is similar to that of the ADR equation.

The remainder of the article is organized as follows. Section 2 introduces the 2D ADR equation and the 2D WP model and their discretization using the FV method. Section 3 outlines the POD-based ROM applied to both equations and the modification of the ROM strategy to approach parametrized problems. Section 4 presents seven numerical cases in which the ROM is solved for different input parameters. Finally, the concluding remarks are given in Section 5.

2. The problem under study and the full-order model

2.1. The 2D advection–diffusion–reaction equation

The 2D advection–diffusion–reaction (ADR) equation is defined as follows:

where u=u(x,y,t) is the conserved variable, a=(ax,ay) is the advection velocity, ν≥0 is the diffusion coefficient, and r is the reaction coefficient. To find u(x,y,t) in the domain (x,y,t)∈[0,Lx]×[0,Ly]×[0,T], Equation (2.1) has to be solved, subject to appropriate initial conditions (ICs) and boundary conditions (BCs).

Equation (2.1) can be numerically approximated by means of the FV method [31,32]. The computational domain is discretized using rectangular volume cells of uniform size Δx×Δy, where the position of the center of the (i,j)-th cell is (xi,yj), with xi=iΔx and yj=jΔy, and i=1,...,Ix and j=1,...,Iy, where Ix and Iy are the number of volume cells in the x- and the y-directions, respectively. The subsets JB and JI contain the pairs of indices (i,j) of the cells that belong to the boundary and to the interior of the domain, respectively, with J=JB∪JI, where J={(i,j),1≤i≤Ix,1≤j≤Iy} is the total set of indices.

Regarding the time discretization, the time step Δt=tn+1−tn with n=0,...,NT−1, where NT is the total number of time steps, is selected dynamically using the Courant–Friedrichs–Lewy (CFL) condition [33]

with 0<CFL≤1.

By means of the FV method, the discretized variables are integrated inside the volume cell (xi−1/2,xi+1/2)×(yj−1/2,yj+1/2) with (i,j)∈J in the time interval (tn,tn+1). The numerical fluxes are reconstructed using the first-order upwind method [34,35], and the diffusion term is discretized using central differences, so that the final full-order model (FOM) of the 2D ADR equation is

where uni,j≈u(xi,yj,tn) is the average value over the cell (xi−1/2,xi+1/2)×(yj−1/2,yj+1/2) at tn, with (i,j)∈JI.
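As a minimal sketch of the kind of update described above, the following Python code advances a one-dimensional ADR problem with a first-order upwind advective flux and a central-difference diffusion term. The equation form ∂u/∂t + a ∂u/∂x = ν ∂²u/∂x² + r u, the zero-gradient treatment of the free boundaries, and the time step restriction in `stable_dt` are assumptions made for illustration and are not taken from Equations (2.1)–(2.4); the function names are hypothetical.

```python
import numpy as np

def stable_dt(a, nu, dx, cfl=0.9):
    """Assumed explicit stability limit (requires a != 0 or nu > 0)."""
    return cfl / (abs(a) / dx + 2.0 * nu / dx**2)

def fou_adr_step(u, a, nu, r, dx, dt):
    """One explicit update: upwind advection, central diffusion, linear reaction."""
    # Ghost cells copy the edge values (zero-gradient "free" boundaries, assumed).
    ue = np.concatenate(([u[0]], u, [u[-1]]))
    a_plus, a_minus = max(a, 0.0), min(a, 0.0)
    # Upwind differences: backward for a > 0, forward for a < 0.
    adv = a_plus * (ue[1:-1] - ue[:-2]) + a_minus * (ue[2:] - ue[1:-1])
    dif = nu * (ue[2:] - 2.0 * ue[1:-1] + ue[:-2]) / dx
    return u - dt / dx * (adv - dif) + dt * r * u

# Usage: transport a Gaussian on [0, 20] with 200 cells up to t = 10.
dx = 0.1
x = (np.arange(200) + 0.5) * dx
u = np.exp(-(x - 5.0) ** 2)
a, nu, r, t, t_end = 0.5, 0.01, 0.0, 0.0, 10.0
while t < t_end - 1e-12:
    dt = min(stable_dt(a, nu, dx), t_end - t)
    u = fou_adr_step(u, a, nu, r, dx, dt)
    t += dt
x_peak = x[np.argmax(u)]  # expected near x0 + a * t_end = 10
```

With r=0 and this time step, every new cell value is a convex combination of its neighbours, so the profile stays between 0 and its initial maximum while it is transported and smoothed.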

All the variables of interest belonging to the volume cells inside the domain, (i,j)∈JI, are integrated in time following the same updating relation indicated in (2.3). However, those on the boundary, (i,j)∈JB, require a special treatment that takes into account the boundary conditions imposed on them. Two examples of free boundary conditions are given below for a point placed at the corner (0,0) and for a point placed at the boundary y=0 of the rectangular domain, respectively

with 2≤i≤Ix−1.

2.2. The 2D wildfire propagation model

The wildfire propagation (WP) model is an application case of the ADR equation. Physics-based wildfire propagation models [5,29,30] are usually defined in two horizontal spatial dimensions, as an approach to predict the evolution of the fire on the Earth's surface and require the spatial distribution and characteristics of the biomass [28] as input parameters.

In the WP model, the energy within the fire is represented by the temperature T=T(x,y,t)>0. According to this model, the two-dimensional finite layer of the ground is composed of fuel, represented by the presence of biomass Y=Y(x,y,t)∈[0,1], which sustains the evolution of the fire. The WP model, as proposed in [28], is

where the rate of variation of the mass fraction of fuel is given by the Arrhenius law [36] as follows:

The heat is generated by the burning reaction of the available fuel in the coupling term, it is transported by the advection term and diffused by the diffusion term, and it is lost to the atmosphere through the cooling (reaction) term.

The parameters of this model are the following: v=(vx,vy) is the advection velocity, which is constant; k is the thermal conductivity; ρ is the density of the medium; cp is the specific heat; T∞ is the ambient temperature; H is the fuel combustion heat; A is the pre-exponential factor; and Tpc is the ignition temperature. All the physical variables that appear in the WP model are expressed in units of the International System; the units have been omitted in all cases for the sake of simplicity.

Following the FOU-based FV method, the variables are integrated into each of the volume cells, and the WP model is discretized as follows [37]:

where Tnij≈T(xi,yj,tn) and Ynij≈Y(xi,yj,tn) are the average values over the cell (xi−1/2,xi+1/2)×(yj−1/2,yj+1/2) at tn, with (i,j)∈JI. The discretization of all the boundary conditions is similar to the linear case in (2.4). The time step is selected dynamically using the CFL condition in (2.2).

3. Reduced-order model

This section presents the standard ROM strategy, the parametrized training methodology used to predict solutions with the ROM for values of the parameters of study that differ from those of the training set, and the ROMs based on the 2D ADR equation (2.1) and the 2D WP model (2.5).

3.1. Standard ROM strategy

The reduced-order modeling strategy consists of two phases: (1) the offline phase, in which the ROM is trained, and (2) the online phase, in which the ROM is solved. The online phase is what justifies the use of the ROM, since it can reduce the computation time with respect to that of the FOM by several orders of magnitude.

A set of NT numerical solutions computed by the FOM at successive time levels, called training solutions, is assembled in the so-called snapshot matrix

with

The proper orthogonal decomposition (POD) [38] of U by means of the singular value decomposition (SVD) [39] decomposes the snapshot matrix into orthogonal components, also called POD modes

where Σ∈RIxIy×NT is a diagonal matrix in which the entries of the main diagonal are the singular values of U and represent the magnitude of each POD mode; Φ∈RIxIy×IxIy and Ψ∈RNT×NT are orthogonal matrices. The matrix Φ=(ϕ1,…,ϕIxIy)∈RIxIy×IxIy with ϕk=(ϕ1,k,…,ϕIxIy,k)T consists of the orthogonal eigenvectors of UUT, which are used to define the reduced space.
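The decomposition can be sketched with NumPy as follows. This is an illustration under stated assumptions: the snapshots are a translated Gaussian used as a stand-in for FOM output, `pod_basis` is a hypothetical helper, and the economy-size SVD (`full_matrices=False`) returns only the first min(IxIy, NT) columns of Φ rather than the full square matrix.

```python
import numpy as np

def pod_basis(U, m_pod):
    """Return the first m_pod POD modes and all singular values of U."""
    Phi, sigma, _ = np.linalg.svd(U, full_matrices=False)
    return Phi[:, :m_pod], sigma

# Stand-in snapshots: a Gaussian translated at constant speed (not FOM output).
x = np.linspace(0.0, 20.0, 200)
times = np.linspace(0.0, 10.0, 51)
U = np.stack([np.exp(-(x - 5.0 - 0.5 * t) ** 2) for t in times], axis=1)

Phi_M, sigma = pod_basis(U, m_pod=5)
# Relative error of the rank-5 reconstruction Phi_M Phi_M^T U.
rel_err = np.linalg.norm(U - Phi_M @ (Phi_M.T @ U)) / np.linalg.norm(U)
# Eckart-Young: the same error follows from the discarded singular values.
rel_err_sigma = np.sqrt(np.sum(sigma[5:] ** 2) / np.sum(sigma ** 2))
```

The decay of `sigma` gives a first indication of how many modes a given snapshot matrix needs before the truncation error becomes negligible.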

Let MPOD be a positive integer such that MPOD≪min(IxIy,NT). It will be chosen to be as small as possible without significantly affecting the accuracy of the solution computed with the reduced-order method [12]. The number of POD modes solved by the ROM in the test cases proposed in this paper has been obtained a posteriori by analyzing the efficiency obtained. In the following sections, Case 4 will illustrate this procedure.

3.2. Parametrized training methodology

The standard strategy used to train the ROM presented in the previous section needs to be modified to predict solutions for values of the input parameters, called the parameters of study, that differ from those used to train the ROM. The training values are denoted by μm, with m=1,...,Mtrain.

For each solution calculated with the FOM for a set of training parameters, also called a training sample, a snapshot matrix U(μm) is generated. All the training sub-matrices are assembled into one common snapshot matrix, also called the training set. This is done simply by placing one sub-matrix after another

where Mtrain is the number of snapshot sub-matrices of the training set. The SVD is applied to this matrix without any special treatment to obtain the basis functions that define the reduced space for a general value of the parameters. Once the reduced space has been defined, the ROM is solved for the new value of the parameters, namely the test value μT. In this paper, the benefits and limitations of this methodology are studied.
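The assembly of the training set can be illustrated with a short sketch. The exact diffusing Gaussian below is only a stand-in for FOM snapshots, and the training and target values of ν, as well as the helper names, are hypothetical.

```python
import numpy as np

def heat_snapshots(nu, x, times, x0=10.0):
    """Exact diffusing Gaussian, used here as a stand-in for FOM snapshots."""
    w = 1.0 + 4.0 * nu * times                      # width parameter at each time
    return np.exp(-(x[:, None] - x0) ** 2 / w) / np.sqrt(w)

def relative_projection_error(Phi, U):
    """Relative Frobenius error of projecting the columns of U onto span(Phi)."""
    return np.linalg.norm(U - Phi @ (Phi.T @ U)) / np.linalg.norm(U)

x = np.linspace(0.0, 20.0, 200)
times = np.linspace(0.0, 40.0, 81)
nu_train = [0.01, 0.05]     # hypothetical training values
nu_target = 0.03            # hypothetical target value

# Training set: sub-matrices placed one after another, column-wise.
U_train = np.hstack([heat_snapshots(nu, x, times) for nu in nu_train])
Phi, _, _ = np.linalg.svd(U_train, full_matrices=False)

# How well does the reduced space represent a solution it was not trained on?
U_target = heat_snapshots(nu_target, x, times)
err_1 = relative_projection_error(Phi[:, :1], U_target)
err_5 = relative_projection_error(Phi[:, :5], U_target)
```

The projection error of the target snapshots is only a lower bound on the error of the ROM itself, but it is a cheap check of whether the training set spans the target solution.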

In order to extend the ROM strategy to parametrized nonlinear problems, such as the WP model (2.5), it is necessary to combine this modified method with the proper interval decomposition (PID) [20]. For this purpose, all the snapshots generated in the different training samples are grouped according to the defined time windows. The SVD is applied to each time window to obtain the different reduced spaces. For the sake of simplicity, in this paper, the training samples and the solution predicted by the ROM are calculated at the same time instants.
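The grouping by time windows can be sketched as follows, assuming that every training sample stores its snapshots at the same time instants and that the windows contain (nearly) equal numbers of snapshots. The function name and the splitting rule are illustrative choices, not the procedure of [20].

```python
import numpy as np

def windowed_pod(samples, n_windows, m_pod):
    """One truncated POD basis per time window, built from all training samples."""
    n_t = samples[0].shape[1]
    windows = np.array_split(np.arange(n_t), n_windows)
    bases = []
    for idx in windows:
        # Gather the snapshots of every sample that fall inside this window.
        U_w = np.hstack([U[:, idx] for U in samples])
        Phi, _, _ = np.linalg.svd(U_w, full_matrices=False)
        bases.append(Phi[:, :m_pod])
    return windows, bases

# Usage with two stand-in samples (Gaussians translated at different speeds).
x = np.linspace(0.0, 20.0, 200)
times = np.linspace(0.0, 10.0, 60)
samples = [np.stack([np.exp(-(x - 5.0 - v * t) ** 2) for t in times], axis=1)
           for v in (0.4, 0.6)]
windows, bases = windowed_pod(samples, n_windows=4, m_pod=3)
```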

In the following subsections, the ROMs of the 2D ADR equation (2.1) and the 2D WP model (2.5) are presented.

3.3. The 2D ADR-based ROM

Intrusive ROMs based on the POD method are alternative numerical schemes that need to be developed from a standard numerical scheme by projecting it from the physical space to the reduced space. The Galerkin method [40] acts as the projection between these two spaces

where ˆunk, with k=1,...,MPOD, is the time-dependent reduced variable and (i,j)∈J.
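For a linear scheme written in matrix form, u^{n+1} = A u^n, the Galerkin projection leads to a reduced operator Φ^T A Φ. The sketch below illustrates this for a one-dimensional upwind ADR scheme with a≥0, no reaction, zero-gradient boundaries, and a fixed time step; all of these are simplifying assumptions, and the names `fou_matrix` and `solve_rom` are hypothetical rather than the coefficients of the 2D ROM.

```python
import numpy as np

def fou_matrix(n, a, nu, dx, dt):
    """Dense matrix A of the explicit update u^{n+1} = A u^n (a >= 0, free BCs)."""
    c = a * dt / dx          # advective Courant number
    d = nu * dt / dx**2      # diffusive number
    A = np.zeros((n, n))
    for i in range(n):
        left, right = max(i - 1, 0), min(i + 1, n - 1)  # edge cells reuse themselves
        A[i, i] += 1.0 - c - 2.0 * d
        A[i, left] += c + d
        A[i, right] += d
    return A

n, dx = 100, 0.2
a, nu = 0.5, 0.01
dt = 0.9 / (a / dx + 2.0 * nu / dx**2)
x = (np.arange(n) + 0.5) * dx
A = fou_matrix(n, a, nu, dx, dt)

# Offline phase: run the FOM and store the snapshots column by column.
n_steps = 30
snapshots = [np.exp(-(x - 5.0) ** 2)]
for _ in range(n_steps):
    snapshots.append(A @ snapshots[-1])
U = np.stack(snapshots, axis=1)
Phi, _, _ = np.linalg.svd(U, full_matrices=False)

def solve_rom(m_pod):
    """Online phase: project, advance in the reduced space, and lift back."""
    Phi_m = Phi[:, :m_pod]
    A_hat = Phi_m.T @ A @ Phi_m            # reduced operator (m_pod x m_pod)
    u_hat = Phi_m.T @ U[:, 0]              # reduced initial condition
    for _ in range(n_steps):
        u_hat = A_hat @ u_hat
    return Phi_m @ u_hat

err_all = np.abs(solve_rom(U.shape[1]) - U[:, -1]).sum()  # every mode kept
err_5 = np.abs(solve_rom(5) - U[:, -1]).sum()             # truncated basis
```

When every mode is kept, the reduced model reproduces the FOM up to rounding, because all the snapshots lie in the span of the basis; truncating the basis trades accuracy for a smaller system.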

The 2D ADR-based ROM is developed by applying the Galerkin method (3.3) to the 2D ADR-based FOM (2.3) and projecting it to the reduced space

where the coefficients are

where the boundary coefficients bi,j are given by the BCs considered. An example of free BCs is

3.4. The 2D WP-based ROM

Regarding the WP model, the Galerkin method reduces both variables

where the temperature needs to be normalized to avoid loss of accuracy in the ROM calculation.

The ROM based on the 2D WP model (2.5) is obtained by applying the Galerkin method (3.7) to the FOM of Eq (2.8)

where the coefficients are

The boundary terms bi,j are computed in a similar manner to the linear case (3.6).

The rate of variation of the mass fraction (2.7) is nonlinear, so its reduction is not straightforward. In this paper, the reduction of the function Ψ(Tni,j) is carried out by defining different time windows in which the POD basis is renewed

The ROM is linearized inside each time window, because this function is computed in the off-line phase within each time window by using time-averaged temperatures as follows:

where (T′ni,j)Trainm are the training samples, Mtrain is the total number of samples computed by the FOM that make up the training set, and MSTW is the number of snapshots included in each time window. The number of time windows is selected a posteriori in the numerical cases solved in this paper to improve efficiency.

Note that the reduced equation for updating ˆY does not depend on the values of ˆT computed during the online phase and is therefore decoupled from the first equation. In the reduced temperature update equation, the biomass coupling term is precomputed in the coefficients. Because of this, a reduced ADR equation similar to (3.4) is solved at each time level.

4. Numerical results

This section presents the numerical results obtained by applying the parameterized ROM strategy to solve the 2D ADR equation and the 2D WP model.

4.1. Parametrized 2D ADR equation

First, the 2D ADR equation is used to study the limitations of the proposed methodology when the values of parameters such as the transport velocity, the diffusion coefficient, the initial condition, or the ROM parameters are modified.

Case 1. Parameter: Diffusion coefficient

The parameter studied in this case is the diffusion coefficient μ=ν. Since this parameter is scalar, a one-dimensional problem is considered for the sake of simplicity. The time-space domain of the case is defined as (x,y,t)∈[0,20]×[0,1]×[0,40]. The initial condition (IC) is defined as the following Gaussian profile

Free boundary conditions are considered. The physical domain is discretized using Ix×Iy=200×1 volume cells and CFL=0.9. The advection velocity is set to zero, ax=ay=0, and the diffusion coefficient is given two different values to generate the training samples. These values, indicated by ν1 and ν2, are shown in Table 1, together with the target value νT.

All the settings of the problem are shown in Table 2.

Three different subcases have been solved with the ROM. For each of these, the training set has been constructed with different samples. As indicated in Table 3, in Subcase 1, the ROM has been trained with ν1; in Subcase 2, with ν2; and in Subcase 3, with both values ν1 and ν2. The ROM has been solved in all subcases using five POD modes, since this number achieves a good compromise between the accuracy of the solution obtained by the ROM and the central processing unit (CPU) time required to compute it. Later, in Case 4, it is analyzed how the solutions are modified if the value of this parameter is changed.

The accuracy obtained by the solutions uROM calculated by the ROM is measured by means of the differences with respect to the solutions uFOM calculated by the FOM using the L1 norm

These differences can be computed at each time step, so the time evolution of the errors can be visualized. The differences ‖d‖1 computed in this case are shown in Table 3. The solutions are shown in Figure 1, where the IC is represented by the black line, the ROM solution by the red line, the test solution by the blue line, and the training solution by the gray line. As can be seen from these representations, all three subcases show high accuracy. This means that the ROM is able to predict solutions for larger values of the diffusion coefficient than the one it has been trained with, as shown in Subcase 1. However, as indicated in Subcase 2, using larger values of this parameter in the training improves the accuracy by three orders of magnitude. In contrast, enlarging the training set with more samples does not improve the accuracy, as reported in Subcase 3.
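A minimal sketch of such a measure is given below. It assumes that the L1 norm is the plain sum of the absolute cell-wise differences; the definition used in the paper may include a normalization or the cell size, and the function name and sample values are hypothetical.

```python
import numpy as np

def l1_difference(u_rom, u_fom):
    """Sum of absolute cell-wise differences (assumed form of the L1 norm).

    For arrays of shape (cells, time levels) the result has one value per
    time level, which gives the time evolution of the error.
    """
    return np.abs(np.asarray(u_rom) - np.asarray(u_fom)).sum(axis=0)

# Usage with made-up values at a single time level.
u_fom = np.array([0.0, 1.0, 2.0, 1.0, 0.0])
u_rom = np.array([0.0, 1.1, 1.8, 1.0, 0.0])
d = l1_difference(u_rom, u_fom)

# Usage with two time levels stored as columns.
d_t = l1_difference(np.stack([u_rom, u_fom], axis=1),
                    np.stack([u_fom, u_fom], axis=1))
```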

It is also possible to study the speed up achieved by the ROM by comparing its CPU time, τROMCPU, with that of the FOM, τFOMCPU, both measured in seconds. The CPU times required by the FOM to compute the test solution and by the ROM to compute the target solution are τFOMCPU=1.00⋅10−2 and τROMCPU=2.61⋅10−4, respectively, so the speed up achieved by the ROM is ×39.

Case 2. Parameter: Advection velocity

The parameter studied in this case is the advection velocity in the x direction μ=ax (with ay=0). In this case, only one component of the velocity is used for the sake of simplicity. Later, the two-dimensional character of the velocity field is studied. The time-space domain of the case is defined as (x,t)∈[0,20]×[0,10]. The IC is defined as the following Gaussian profile:

Free boundary conditions are considered. The physical domain is discretized using Ix×Iy=200×1 volume cells and CFL=0.9. The diffusion coefficient is set as ν=0.01, and the advection velocity in the x direction is given six different values to generate the training samples. These values are shown in Table 4, together with the target value (ax)T.

All the settings of the problem are shown in Table 5.

The ROM has been solved 22 different times, corresponding to the 22 subcases indicated in Figure 2. In each of these subcases, the training set consists of different combinations of the six samples for different values of the parameter of interest ax listed in Table 5. In the first six subcases, the ROM has been trained with a single sample, choosing a different one in each subcase; while in the rest, at least two samples are used. For example, in Subcase 5, the ROM has been trained using just Sample 5 ((ax)5=0.8); in Subcase 16, the ROM has been trained using Samples 2 and 5 ((ax)2=0.2 and (ax)5=0.8). Through this exploration of the number of samples and which ones in particular, the most appropriate composition of the parametric training set has been studied to predict the target solution with (ax)T=0.5. In all subcases, the ROM has been solved using five POD modes.

The differences between the ROM and FOM solutions ‖d‖1 are presented in Figure 3. The main conclusion that can be drawn from these results is that it is necessary to train the ROM with a solution obtained with a higher advection velocity than the target. In other words, it is necessary to use a solution that has gone further in space. In addition to this, the accuracy of the solution predicted by the ROM will be higher the closer the training value is to the target. If it gets further away towards higher values, the accuracy drops, although it still works. This is clearly shown in the single-trained Subcases 4–6, as Subcase 4 has the best accuracy, and Subcases 5 and 6 worsen with respect to Subcase 4, but remain below Subcases 1–3. The ROM is able to accurately predict the time evolution of the initial Gaussian profile in Subcases 4–6, as shown in Figure 4d–f, whereas in Subcases 1–3, the ROM changes the shape of the Gaussian profile, as shown in Figure 4a–c.

In Figure 3, of all the subcases, the one showing the smallest differences is Subcase 4, where the training set is composed of a single sample. However, the training sets are rarely composed of only one solution and, in addition, the possible lack of knowledge of their composition may mean that they are not sufficiently close. Thus, it is necessary to consider sets composed of several samples, as is done in Subcases 7–22. In general, it has been found that the subcases with the smallest differences are those containing Training Sample 4, such as Subcases 9, 10, 17, 19, 21, and 22. In these subcases, the differences obtained are above those of Subcase 4, but they are still good levels of error, as can be seen in the solutions shown in Figure 4g–l. In these subcases, the solutions predicted by the ROM are practically identical to the test solutions. From this, it can be deduced that as long as the differences are below a value of approximately 0.15, the ROM solution will be good. This value of ‖d‖T1=1.5⋅10−1 will be used as a threshold for selecting solutions with acceptable accuracy. This value has been included in the figure by means of a horizontal dashed line, according to which, Subcases 4, 9, 17, 19, and 21 would be accepted.

The CPU times required by the FOM and the ROM are τFOMCPU=1.08⋅10−3 and τROMCPU=1.93⋅10−5, respectively, so the speed up is ×56.
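The speed up is simply the ratio of the two CPU times. As a worked check with the values reported for this case (the variable names are illustrative):

```python
tau_fom = 1.08e-3   # FOM CPU time in seconds, as reported for Case 2
tau_rom = 1.93e-5   # ROM CPU time in seconds, as reported for Case 2
speed_up = tau_fom / tau_rom
print(f"speed up = x{speed_up:.0f}")  # prints "speed up = x56"
```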

Case 3. Parameter: Initial Gaussian profile

This test case is designed to study the ability of the ROM to predict solutions when the coefficients defining the IC act as input parameters. Since a detailed analysis of the elements that define the IC is carried out, in this case a one-dimensional case is used. The time-space domain of the case is defined as (x,y,t)∈[0,20]×[0,1]×[0,10]. A Gaussian profile is defined as the IC

where the four coefficients ˉu, u0, c, and x0 are treated as input parameters. Each of these parameters is studied separately to see how they affect the ROM's predictions. They are given six different values structured around the target value, so that six training samples are considered to build the training set according to the distribution of subcases indicated in Figure 5.

Free boundary conditions are considered. The physical domain is discretized using Ix×Iy=200×1 volume cells and CFL=0.9. The diffusion coefficient is set to ν=0.01, and the advection velocity is set to a=(ax,ay)=(0.5,0). All the settings of the problem are kept fixed in all the following different cases and are shown in Table 6.

Parameter of study: ˉu

First, the offset of the initial Gaussian profile ˉu is considered as the parameter of study and given the values indicated in Table 7. The target value is also included.

The rest of the coefficients defining the initial Gaussian profile are given the following values,

The ROM has been solved using MPOD=6 POD modes in all subcases.

The differences ‖d‖1 of all subcases are shown in Figure 6. In all the single-trained subcases, the ROM obtains very high differences, even in Subcases 3 and 4, where the training values of ˉu are the closest to the target value. The solutions computed by the ROM are not able to accurately predict the final Gaussian profile of the test solution, as indicated in Figure 7a–f. This is due to the fact that the initial Gaussian profile, when projected into the reduced space (using a small number of POD modes), is deformed and loses accuracy. Therefore, when it is transported in space, it maintains this deformation. This is illustrated by Figure 7g–l, in which the IC is represented together with its reprojection, that is, its projection onto the reduced space mapped back to the physical space, ΦΦTu(x,0).

However, in subcases where the training set is composed of two or more samples, whatever they are, the ROM is able to predict solutions with high accuracy. Thus, Subcases 7–22 show similar differences that are below the acceptance threshold ‖d‖T1, as shown in Figure 6. In short, the offset of the initial Gaussian profile ˉu is a very malleable parameter that simply requires a training set with several samples and can be given arbitrary values.
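One possible reading of this behaviour can be checked on a toy example. The sketch below is not the paper's setup: translated Gaussians on a constant offset stand in for the FOM snapshots, the offsets 1.0, 2.0, and 1.5 are hypothetical, and every numerically nonzero mode is kept. With a single offset in the training set, the constant function is not contained in the span of the snapshots, so an IC with a different offset cannot be reprojected exactly; with two offsets, the difference between samples supplies the constant.

```python
import numpy as np

x = np.linspace(0.0, 20.0, 200)
centers = np.arange(4.0, 17.0, 2.0)      # 4, 6, ..., 16: positions visited in time

def sample(offset):
    """Stand-in training sample: translated Gaussians on a constant offset."""
    return np.stack([offset + np.exp(-(x - c) ** 2) for c in centers], axis=1)

def reprojection_error(samples, u):
    """Relative error of Phi Phi^T u, keeping every numerically nonzero mode."""
    Phi, s, _ = np.linalg.svd(np.hstack(samples), full_matrices=False)
    Phi = Phi[:, s > 1e-8 * s[0]]
    return np.linalg.norm(u - Phi @ (Phi.T @ u)) / np.linalg.norm(u)

u_target = 1.5 + np.exp(-(x - centers[0]) ** 2)   # IC with an untrained offset
err_single = reprojection_error([sample(1.0)], u_target)
err_double = reprojection_error([sample(1.0), sample(2.0)], u_target)
```

In this toy setting `err_double` is at rounding level while `err_single` is not, which is consistent with the observation that any two offset values are enough.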

The CPU times required by the FOM and the ROM are τFOMCPU=9.05⋅10−4 and τROMCPU=1.94⋅10−5, respectively, so the speed up is ×47.

Parameter of study: u0

Second, the amplitude of the initial Gaussian profile u0 is considered as the parameter of study and given the values indicated in Table 8.

The rest of the coefficients defining the initial Gaussian profile are given the following values

The ROM has been solved using MPOD=5 POD modes.

Similar to what has been observed with the offset coefficient ˉu, the ROM needs two or more samples in the training set when predicting solutions for different values of the amplitude of the initial Gaussian profile. This is shown in Figure 8, where Subcases 7–22 present similar differences below the threshold difference ‖d‖T1.

The CPU times required by the FOM and the ROM are τFOMCPU=8.88⋅10−4 and τROMCPU=1.42⋅10−5, respectively, so the speed up is ×63.

Parameter of study: c

Third, the width of the initial Gaussian profile c is considered as the parameter of study and given the values indicated in Table 9.

The rest of the coefficients defining the initial Gaussian profile are given the following values:

The ROM has been solved using MPOD=8 POD modes.

The width of the initial Gaussian profile is a more demanding parameter, since most of the subcases show differences above the threshold difference ‖d‖T1, as shown in Figure 9. Only in Subcases 12, 17, 20, 21, and 22 is the ROM able to predict accurate solutions, as shown in Figure 10. What these subcases have in common is the presence of Sample 3 in their training set. This sample has been computed with the value of c that is the closest below the target value, which corresponds to the initial Gaussian profile whose width is the closest above that of the target. In the subcases in which the training set is composed of several samples (including Sample 3), the differences also decrease, as indicated in Subcases 20–22. It can therefore be concluded that the ROM needs to be trained with several samples that include wider profiles.

In addition to this, with the previous parameters, only six and five POD modes have been enough to achieve good predictions, but when considering the width of the Gaussian profile, it is necessary to increase the number of POD modes to eight to obtain satisfactory differences.

The CPU times required by the FOM and the ROM are τFOMCPU=8.86⋅10−4 and τROMCPU=3.09⋅10−5, respectively, so the speed up is ×29.

Parameter of study: x0

Lastly, the position of the initial Gaussian profile x0 is considered as the parameter of study and given the values indicated in Table 10.

The rest of the coefficients defining the initial Gaussian profile are given the following values

The ROM has been solved using MPOD=5 POD modes.

Subcases 4, 13, 17, 19, 21, and 22 show much smaller differences than the threshold difference ‖d‖T1. In all these subcases, the training sets include Sample 4, which is computed with the closest value above the target value. The smallest differences are obtained in Subcase 17, where the training set includes just Samples 3 and 4. The differences are increased if more samples are added to the training set, although the ROM continues to obtain good solutions, as shown in Figure 12.

The CPU times required by the FOM and the ROM are τFOMCPU=8.96⋅10−4 and τROMCPU=1.44⋅10−5, respectively, so the speed up is ×62.

Case 4. Parameters: Mtrain and MPOD

It is possible that the samples that compose the training set cannot be designed and that the training set is given in advance. In this case, it is necessary to study how to adjust the ROM parameters that remain accessible. With this objective, the training set has been built with ten samples in which the coefficients that define the initial Gaussian profile (4.4) have been given random values, as shown in Figure 13.

The time-space domain of the case is defined as (x,y,t)∈[0,20]×[0,20]×[0,10]. A Gaussian profile is defined as the IC

where the six coefficients ˉu, u0, cx, cy, x0, and y0 are given random values, as shown in Figure 13. Free boundary conditions are considered. The physical domain is discretized using Ix×Iy=100×100 volume cells and CFL=0.4. The diffusion coefficient is set as ν=0.01, and the advection velocity is set as a=(ax,ay)=(0.5,0.5). All the settings of the problem are kept fixed in all the following different cases and are shown in Table 11.

The training set has been designed to add samples one by one in increasing order, as follows:

In addition to this, the ROM has been solved using different values of the number of POD modes

Therefore, the subcases solved by the ROM are all the combinations of the values of these two parameters. That is, there are 100 subcases whose training sets are composed as shown in Figure 14. Every 10 subcases, the training set is reset to the first sample only, and three POD modes are added.
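The ordering of the subcases can be made explicit with a small helper. This is a sketch of the indexing only, assuming that within each block of ten subcases the k-th subcase uses the first k samples; the function name is hypothetical, and the actual numbers of POD modes of each group are not reproduced here.

```python
def subcase_layout(subcase, n_samples=10):
    """Map a 1-based subcase index to (POD-mode group, number of training samples)."""
    group, position = divmod(subcase - 1, n_samples)
    return group + 1, position + 1

# Subcase 61 opens the seventh POD-mode group with a single training sample.
layout_61 = subcase_layout(61)
```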

The differences of all the subcases are shown together in Figure 15, which reveals a general tendency to decrease as the number of POD modes increases. In the last four data sets (from Subcase 61 onwards), differences smaller than the threshold difference ‖d‖T1 are reached. In other words, the ROM predicts good solutions whenever it uses at least 18 POD modes. This is best seen in the 2D representation shown in Figure 16a, where the red line indicates the threshold difference. It can be seen that just one training sample is never enough, since the error is always very large. In fact, at least four samples are needed in the training set when they have arbitrary values. From four samples onwards, and with a high number of POD modes, the differences remain at similar values (below the threshold difference), for which the predictions are accurate.

As the number of POD modes used by the ROM increases, the computation times increase and, consequently, the speed up decreases, as shown in Figure 16b. Thus, it is desirable to use as few POD modes as possible to make the ROM faster than the FOM, but without losing accuracy in the predictions. Taking this into account, the optimal value of POD modes is around 18 POD modes, since it achieves three orders of magnitude of speed up and the accuracy of the solution is guaranteed.

Case 5. Parameter: velocity

In Case 2, it was concluded that it is necessary for the training set to contain a sample with a higher advection velocity than the target for the ROM to predict accurate solutions. In the case of a two-dimensional velocity field, this conclusion could be extended to cases where the target solution is transported in the same direction as the training samples (and its magnitude may vary). That is, the ratio ax/ay is kept constant. However, it is highly interesting to study what happens if the angle of the transport direction of the target solution is modified.

The training set is composed of a single sample, as indicated in Figure 17a, and the ROM is solved for different target values by modifying the transport direction (and its magnitude) as indicated in the same table, which lists the values of the study parameters

following the formulation indicated in (4.6). The diffusion coefficient is set to ν=0.

The training and target values are also shown in Figure 17a, where the circular symmetry of the field can be observed. The target velocities of T1, T2, and T3 are equal in magnitude to the training sample but are rotated away from it by 5°, 10°, and 20°, respectively, on the circumference centred at (0,0). The velocities of T5, T6, and T7 are shifted by the same angles but have a smaller magnitude. The velocities of T4 and T8 differ in magnitude from the training value but point in the same direction.
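The construction of the target velocities can be illustrated with a short sketch. The training velocity `a_train`, the magnitude factors `smaller` and `larger`, and the sense of rotation are hypothetical choices made only for illustration; the actual values are those shown in Figure 17a. Assigning the lower magnitude to T4 and the higher one to T8 is also an assumption.

```python
import numpy as np


def rotate_and_scale(velocity, angle_deg, scale=1.0):
    """Rotate a 2D velocity about (0, 0) by angle_deg and rescale its magnitude."""
    theta = np.deg2rad(angle_deg)
    rotation = np.array([[np.cos(theta), -np.sin(theta)],
                         [np.sin(theta), np.cos(theta)]])
    return scale * (rotation @ np.asarray(velocity, dtype=float))


a_train = np.array([1.0, 1.0])   # hypothetical training velocity (on the diagonal)
smaller, larger = 0.8, 1.2       # hypothetical magnitude factors

targets = {
    "T1": rotate_and_scale(a_train, 5.0),            # same magnitude, rotated
    "T2": rotate_and_scale(a_train, 10.0),
    "T3": rotate_and_scale(a_train, 20.0),
    "T4": rotate_and_scale(a_train, 0.0, smaller),   # same direction, rescaled
    "T5": rotate_and_scale(a_train, 5.0, smaller),   # rotated and rescaled
    "T6": rotate_and_scale(a_train, 10.0, smaller),
    "T7": rotate_and_scale(a_train, 20.0, smaller),
    "T8": rotate_and_scale(a_train, 0.0, larger),    # same direction, rescaled
}

train_angle = np.arctan2(a_train[1], a_train[0])
for name, a in targets.items():
    angle = np.degrees(np.arctan2(a[1], a[0]) - train_angle)
    print(f"{name}: |a| = {np.linalg.norm(a):.3f}, rotation = {angle:.1f} deg")
```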

The time-space domain of the case is defined as (x,y,t)∈[0,2]×[0,2]×[0,2]. The IC is the same for all target solutions and is given by

and can be seen in Figure 17c. Free boundary conditions are considered.

The physical domain is discretized using Ix×Iy=150×150 volume cells and CFL=0.4. All the settings of the problem are shown in Table 13.

Regarding the results, the target velocities of T4 and T8 agree with the results obtained in Case 2. As shown in Figure 17b, the differences computed for T4 stay at a very low level for the whole simulation time, so the predicted solutions are highly accurate, whereas the differences of T8 grow once its solution moves beyond the final position reached by the training solution (since the training velocity is lower). As for the cases in which the direction of transport is modified, Figure 17b shows that their differences ‖d‖1 are grouped according to the angle by which the direction varies, being smaller for smaller angles. Accordingly, the change in magnitude has hardly any impact on these differences.

In addition to this, the representations shown in Figure 18 were proposed to further test the predictive capability of ROMs. In this figure, the time evolution of the solutions at three different points in the domain is shown. The dashed lines represent the target solution predicted by the ROM, and the solid lines represent the test solution computed by the FOM as reference solution. In this case, the following three points lie on the diagonal:

i.e., for values of vx=vy. Therefore, the figures show how the test solutions decrease in magnitude as their velocities move off the diagonal. In other words, at the point (x3,y3), the tests T1 and T5 present a magnitude that is greater than that of T2 and T6; for T3 and T7, it even vanishes.

As can be seen in the figures, the ROM predictions tend to maintain approximately constant magnitudes and do not decrease enough to resemble the tests. This is unacceptable for the targets T2 and T6 (10° of separation from the diagonal) and even more so for T3 and T7 (20° of separation from the diagonal), where there should be no magnitude. It is therefore desirable that the target velocities are not rotated by more than 5° with respect to the training velocities. With this in mind, the training sample must be carefully designed so as not to introduce an error that deviates from the expected physics, as shown in Case 6.

The ROM has been solved using 20 POD modes, requiring a CPU time of τROMCPU=5.27⋅10^−4. Since the CPU time required by the FOM is τFOMCPU=6.64⋅10^−1, the speed up achieved is ×1268.

4.2. Parametrized 2D WP model

A series of numerical results are presented below in which the parameterized fire model is solved taking into account the conclusions obtained from the results of the linear equation.

Case 6. Parameter: velocity

The parameter studied in this case is the wind velocity, as indicated in order in the following equations:

These parameters are given the values shown in Figure 19, where the training velocities are grouped into two different magnitudes, i.e., 0.5 and 1. Within each group, the velocities are separated by 10° in circular symmetry, so that the four samples closest (in blue) to the test velocity (in red) are chosen to carry out the training. The rest of the parameters of the wildfire model (2.5) are given the following fixed values.
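The selection of the training samples can be sketched as a nearest-neighbour search in velocity space. The angular range of the candidates, the test velocity `w_test`, and the function names are hypothetical; only the two magnitudes (0.5 and 1), the 10° spacing, and the choice of the four closest samples come from the description above.

```python
import numpy as np


def candidate_velocities(magnitudes=(0.5, 1.0), angles_deg=range(0, 91, 10)):
    """Return rows (magnitude, angle_deg, wx, wy) on circles centred at (0, 0)."""
    rows = []
    for magnitude in magnitudes:
        for angle in angles_deg:
            theta = np.deg2rad(angle)
            rows.append((magnitude, angle,
                         magnitude * np.cos(theta), magnitude * np.sin(theta)))
    return np.array(rows)


def closest_samples(candidates, w_test, n_closest=4):
    """Pick the n_closest candidates by Euclidean distance to w_test."""
    distance = np.linalg.norm(candidates[:, 2:4] - np.asarray(w_test), axis=1)
    return candidates[np.argsort(distance)[:n_closest]]


candidates = candidate_velocities()
# Hypothetical test velocity: magnitude 0.75, direction 45 degrees.
w_test = 0.75 * np.array([np.cos(np.deg2rad(45.0)), np.sin(np.deg2rad(45.0))])
selected = closest_samples(candidates, w_test)
for magnitude, angle, wx, wy in selected:
    print(f"|w| = {magnitude:.1f}, angle = {angle:.0f} deg, w = ({wx:.3f}, {wy:.3f})")
```

For this hypothetical test velocity, the four selected samples surround the target both in magnitude and in direction, each lying 5° away from it.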

The time-space domain of the case is defined as (x,y,t)∈[0,200]×[0,200]×[0,100]. The IC for the temperature and the fuel mass fraction are given by

Free boundary conditions are considered. The physical domain is discretized using Ix×Iy=150×150 volume cells and CFL=0.4. All the settings of the problem are shown in Table 15, including the number of POD modes MPOD and the number of snapshots per time window MSTW.

Figure 20 shows the test solutions of T and Y at the final time, and Figure 21 shows the solutions predicted by the ROM at different time instants. In Figure 21c,f, it can be clearly seen that the solution predicted by the ROM is made up of the training solutions, because their shadows are superimposed. Because of this, the wavefront, which is very well delimited in the test case, as shown in Figure 20, is very blurred in the ROM case.

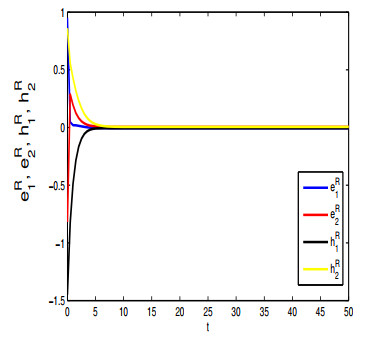

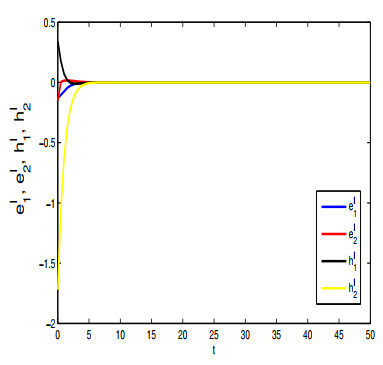

Nevertheless, the evolution of the solution at the three gauging points

shows an acceptable agreement between the two solutions, as can be seen in Figure 22b. The closer the predicted solution is to the origin of the IC, the more closely it matches the test solution. However, as it evolves, it becomes out of phase. This can be seen in Figure 22, which shows the time evolution of the absolute value of the position at which the maximum is located. In this figure, it can be seen that up to t=40, the slope of the solution predicted by the ROM (red) overlaps with the test slope (blue); however, for longer times, it ends up tending to the closest training solution (gray).
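A minimal sketch of how the position of the maximum could be tracked over time is given below. It assumes that the solution is stored as an array of snapshots on a uniform cell-centred grid and that the "absolute value of the position" is the Euclidean distance to the domain origin; the function name, the array layout, and the synthetic moving Gaussian used to exercise it are hypothetical.

```python
import numpy as np


def max_position_norm(snapshots, x, y):
    """Distance from the origin to the cell holding the maximum, per snapshot.

    snapshots has shape (n_t, n_y, n_x); x and y hold the cell-centre
    coordinates along each direction.
    """
    n_t = snapshots.shape[0]
    flat_index = snapshots.reshape(n_t, -1).argmax(axis=1)
    iy, ix = np.unravel_index(flat_index, snapshots.shape[1:])
    return np.hypot(x[ix], y[iy])


# Synthetic example: a Gaussian moving along the diagonal of [0, 200] x [0, 200].
n_cells = 150
dx = 200.0 / n_cells
x = (np.arange(n_cells) + 0.5) * dx
y = x.copy()
X, Y = np.meshgrid(x, y)            # both arrays have shape (n_y, n_x)
centres = [50.0, 80.0, 110.0]       # hypothetical positions of the maximum
snapshots = np.stack([np.exp(-((X - c) ** 2 + (Y - c) ** 2) / 200.0)
                      for c in centres])

r_max = max_position_norm(snapshots, x, y)
print(r_max)
```

Applying the same function to the ROM, test, and training snapshots would give curves whose slopes can be compared directly.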

The ROM has been solved using 30 POD modes with 100 snapshots per time window. The CPU times required by the FOM and the ROM are τFOMCPU=1.6 and τROMCPU=7.70⋅10^−2, respectively, so the speed up achieved is ×21.

Case 7. Parameter: Diffusion coefficient

The parameter studied in this case is the diffusion coefficient

This parameter is given the values indicated in Table 16, where the two training values and the target value are found. The rest of the parameters of the model are given the fixed values indicated in Table 17.

The time-space domain of the case is defined as (x,y,t)∈[0,200]×[0,200]×[0,100]. The IC for the temperature and the fuel mass fraction is given by (4.8). Free boundary conditions are considered. The physical domain is discretized using Ix×Iy=150×150 volume cells and CFL=0.4. All the settings of the problem are shown in Table 18, including the number of POD modes MPOD and the number of snapshots per time window MSTW.

The time evolution of the solution at the three gauging points given in (4.9) reveals a good correspondence between the solution predicted by the ROM and the test solution, although the ROM takes a little longer to lower the temperature, as can be seen in the tails at each point. This confirms the conclusions obtained with the linear equation, since it is possible to train the ROM with only two values of the diffusion coefficient and predict accurate solutions, even with a training range as large as the one used in this case, from 0.01 to 10. The ROM has been solved using 10 POD modes and 153 snapshots per time window. The CPU times required by the FOM to compute the test solution and by the ROM to predict the target solution are τFOMCPU=9.71 and τROMCPU=9.30⋅10^−2, respectively, and the corresponding speed up is ×105.

Case 8. Parameter: Pre-exponential factor

The parameter studied in this case is the pre-exponential factor

This parameter is given the values indicated in Table 19, where the two training values and the target value are found. The rest of the parameters of the model are given the fixed values indicated in Table 20.

The time-space domain of the case is defined as (x,y,t)∈[0,200]×[0,200]×[0,100]. The IC for the temperature and the fuel mass fraction is given by (4.8). Free boundary conditions are considered. The physical domain is discretized using Ix×Iy=150×150 volume cells and CFL=0.4. All the settings of the problem are shown in Table 21.

The results predicted by the ROM closely resemble the test results, as confirmed by the time evolution of the solution at the gauging points (4.9) in Figure 24b. The ROM is solved using 15 POD modes and 50 snapshots per time window. The CPU times required by the FOM to compute the test solution and by the ROM to predict the target solution are τFOMCPU=1.31 and τROMCPU=5.10⋅10^−2, respectively, and the corresponding speed up is ×26.

Case 9. Parameter: Reaction coefficient

The parameter studied in this case is the reaction coefficient

This parameter is given the values indicated in Table 22, where the two training values and the target value are found. The rest of the parameters of the model are given the fixed values indicated in Table 23.

The time-space domain of the case is defined as (x,y,t)∈[0,200]×[0,200]×[0,100]. The IC for the temperature and the fuel mass fraction is given by (4.8). Free boundary conditions are considered. The physical domain is discretized using Ix×Iy=150×150 volume cells and CFL=0.4. All the settings of the problem are shown in Table 24.

The results predicted by the ROM achieved high accuracy with respect to the test solution. The time evolution of the solution at the gauging points (4.9) confirms that only two training samples allow the ROM to predict accurate solutions when changing the reaction coefficient. The ROM has been solved using 15 POD modes and 80 snapshots per time window. The CPU times required by the FOM to compute the test solution and by the ROM to predict the target solution are τFOMCPU=1.31 and τROMCPU=2.90⋅10^−2, respectively, and the corresponding speed up is ×45.

Case 10. Parameter: Shape of the IC

In this test case, the parameters that are studied belong to the IC. As concluded in the test cases solved with the linear equation, the shape of the IC can be arbitrarily modified, but its position is more difficult for the ROM to predict. For this reason, only the IC parameters related to its shape are considered in this case; the IC can be given by

where

are the parameters of study. These are given the values contained in Table 25 together with the target values. The training ICs and the target IC are shown in Figure 26. The parameters that define the model (2.5) are given fixed values as shown in Table 26.

The time-space domain of the case is defined as (x,y,t)∈[0,200]×[0,200]×[0,100]. Free boundary conditions are considered. The physical domain is discretized using Ix×Iy=150×150 volume cells and CFL=0.4. All the settings of the problem are shown in Table 27.

The ROM is able to predict accurate solutions when the values that define the shape of the IC are changed. The time evolution of the solution at the gauging points (4.9) confirms this conclusion, since the ROM resembles the test even though the maxima are not as high as they should be (Figure 27). The ROM has been solved using 15 POD modes and 80 snapshots per time window. The CPU times required by the FOM to compute the test solution and by the ROM to predict the target solution are τFOMCPU=1.28 and τROMCPU=3.00⋅10^−2, respectively, and the corresponding speed up is ×43.
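The speed ups quoted in Cases 5 to 10 are the ratios of the FOM and ROM CPU times. The short sketch below recomputes them from the rounded times printed in the text; the dictionary and function names are hypothetical. Since the printed times are rounded, the recomputed ratios can differ slightly from the quoted values (this is the case for Cases 5 and 7).

```python
# (FOM CPU time, ROM CPU time) as printed in the text for each case.
cpu_times = {
    "Case 5": (6.64e-1, 5.27e-4),
    "Case 6": (1.6, 7.70e-2),
    "Case 7": (9.71, 9.30e-2),
    "Case 8": (1.31, 5.10e-2),
    "Case 9": (1.31, 2.90e-2),
    "Case 10": (1.28, 3.00e-2),
}


def speed_up(t_fom, t_rom):
    """Speed up of the ROM with respect to the FOM."""
    return t_fom / t_rom


speed_ups = {case: speed_up(*times) for case, times in cpu_times.items()}
for case, value in speed_ups.items():
    print(f"{case}: x{value:.1f}")
```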

5.

Concluding remarks

A methodology has been proposed to solve the parametrized versions of the ADR equation (2.1) and the WP model (2.5) using POD-based ROMs, which allows solutions to be predicted for values of the problem parameters other than the training ones.

Several numerical cases, summarized in Table 28, have been solved to analyze in detail the capability of this parametrized ROM strategy. In the first five cases, it has been applied to the 2D ADR equation (2.1), where solutions for different values of the advection velocity and the diffusion coefficient have been accurately predicted. Other input parameters successfully considered are the coefficients defining the initial condition. The reduced version of the 2D WP model (2.5) requires the definition of time windows to linearize the nonlinear coupling term. The parametrized ROM strategy is also applied to this model for certain physical parameters, such as the diffusion coefficient, the pre-exponential factor, and the reaction coefficient.

The particular conclusions obtained for the different parameters studied are summarized below. The ROM is able to predict solutions for new values of the diffusion and reaction coefficients if at least one training value is lower and another is higher than the target, as shown in Cases 1, 7, and 9. As for the advection velocity, it has been noted in Case 2 that an arbitrary set of training samples has to contain at least one sample with a velocity value as close as possible to the target and above it. In Cases 5 and 6, training samples with circularly distributed velocity values have been proposed, and it has been observed that a separation of up to 5° between the training and the target values allows the prediction of accurate solutions.
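These two rules can be written as simple checks on a training set. The sketch below is only an illustration: the function names are hypothetical, as are the target values used in the example; the training values 0.01 and 10 are the limits of the diffusion range used in Case 7.

```python
def is_bracketed(training_values, target):
    """True if at least one training value is lower and another is higher."""
    return min(training_values) < target < max(training_values)


def closest_faster_sample(training_speeds, target_speed):
    """Return the training speed closest to the target from above, or None."""
    faster = [speed for speed in training_speeds if speed > target_speed]
    return min(faster) if faster else None


# Diffusion coefficient: training range of Case 7, hypothetical target value.
print(is_bracketed([0.01, 10.0], 1.0))

# Advection velocity magnitude: hypothetical training speeds and targets.
print(closest_faster_sample([0.3, 0.6, 0.9], 0.5))
print(closest_faster_sample([0.3, 0.6, 0.9], 1.2))
```

The last call returns `None`, which flags a target that lies beyond every training sample and for which the prediction is therefore not expected to remain accurate.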

Given an arbitrary training set computed with random values of the parameters that define the IC, the ROM needs at least 18 POD modes (in 2D problems) and 4 training samples to predict accurate solutions with high speed up, as observed in Case 4. Regarding the values that define the IC, the ROM is able to predict solutions changing the offset or the amplitude of the initial profile when the training set is composed of at least two samples with arbitrary values, as shown in Case 3. Regarding the width of the initial profile, the ROM needs to be trained with samples computed for wider profiles than the target. Unlike in the linear problem, the initial position is particularly difficult to predict when solving linearized ROMs, such as that of the 2D WP model (3.8), as shown in [41]. It remains for future work to explore possibilities to overcome this limitation.

Use of AI tools declaration

The authors declare they have not used artificial intelligence (AI) tools in the creation of this article.

Acknowledgments

This work was supported by Project PID2022-137334NB-I00 funded by MCIN/AEI/10.13039/501100011033 and by European Regional Development Fund (ERDF) of the Spanish Ministry of Science and Innovation and the European Regional Development Fund. This work has also been funded by the Spanish Ministry of Science, Innovation and Universities – Agencia Estatal de Investigación (10.13039/501100011033) and Fondo Europeo de Desarrollo Regional (FEDER) under project-No. PID2022-141051NA-I00. It is also partially funded by the Government of Aragón, through the research grant T32_23R Fluid Dynamics Technologies. The authors belong to and are supported by the Aragón Institute of Engineering Research (I3A) of the University of Zaragoza.

Conflict of interest

A. Navas-Montilla is a guest editor for this special issue and was not involved in the editorial review or the decision to publish this article. The authors declare there is no conflict of interest.
