
1. Introduction

Research in neuroscience and psychology has shown that EEG signals can reflect changes in an individual's emotional state [1]. Because EEG signals vary across individuals and are non-stationary [2], building cross-subject emotion recognition models has become an important research direction. This study examines the emotions that the same emotion-inducing paradigm evokes within and across subjects, and trains on cross-subject emotional features with the aim of improving the classification accuracy of cross-subject emotion recognition models [3].

Machine learning aims to give computers human-like intelligence by training models that improve themselves through learning from data, which makes it well suited to tasks such as processing EEG signals. Aljuhani et al. [4] used machine learning algorithms to recognize emotions from speech, extracting spectral features such as Mel-frequency cepstral coefficients (MFCC) and the Mel spectrum, and obtained 77.14% accuracy with a support vector machine (SVM). Liu and Fu [5] trained an SVM for emotion recognition and proposed a multi-channel feature fusion method; recognition accuracy across subjects ranged from 0.70 to 0.87, and the PLCC and SROCC reached 0.843 and 0.789, respectively. Salido Ortega et al. [6] used machine learning to build individual, general, and gender-specific models that automatically identify subjects' emotions, showing that individual emotions are highly correlated with context; they used contextual data to recognize emotions automatically in real-life situations. Karbauskaite et al. [7] studied facial emotion recognition and, by combining four features, reached an emotion classification accuracy of 76%. Xie et al. [8] proposed a transformer-based cross-modal fusion technique and a multimodal network architecture for emotion estimation that achieved an accuracy of 65%. Li et al. [9] proposed the TANN neural network, which uses local and global attention mechanisms to adaptively highlight transferable brain-region data and samples in order to learn emotion-discriminative information.

Deep learning combines low-level features into more abstract high-level features or categories, learning effective feature representations from large amounts of input data and applying them to classification, regression, and information retrieval. It is also applicable to EEG signal processing. Jiang et al. [10] built a 5-layer CNN to classify EEG signals, reaching an average accuracy of 69.84%, 0.79% higher than that of the CVS system. Zhang and Li [11] proposed a teaching speech emotion recognition method based on multi-feature fusion deep learning, with a recognition accuracy of 75.36%. Liu and Liu [12] used a back-propagation (BP) neural network together with EEG signals to classify criminal psychological emotions. Liu et al. [13] used the MHED dataset to study a hierarchical-attention-based multimodal fusion network for video emotion recognition, achieving an accuracy of 63.08%. Quan et al. [14] showed that interpersonal features can improve the performance of automatic emotion recognition, with a highest accuracy of 76.68% at the valence level. Fang et al. [15] proposed a Multi-feature Deep Forest (MFDF) model to identify human emotions.

We employed the random forest (RF) classification model from machine learning. RF is an ensemble of decision trees that trains on and predicts samples with multiple trees. It is easy to build, provides feature-importance weights, and is less prone to overfitting. Anzai et al. [16] used a random forest to build a frailty classifier and a fall classifier to identify frailty and fall risk in the elderly; the overall balanced accuracy for identifying frail subjects was 0.75 ± 0.04, and the overall balanced accuracy for classifying subjects with a recent history of falls was 0.57 ± 0.05 (F1 score: 0.62 ± 0.04).

Researchers have also made considerable progress in optimizing classification models. Zhang et al. [17] used Bayesian hyperparameter optimization to tune a random forest classifier for urban land-cover classification of Sentinel-2 satellite images; with RGB band features, the Bayesian-optimized RF was 0.5% more accurate than the unoptimized RF, and with multispectral band features its accuracy increased by 1.8%. Beni and Wang [18] proposed swarm intelligence in 1989. Probabilistic search algorithms built by simulating the swarm behavior of natural organisms are attractive because they are independent of the optimization problem itself, require few parameters, are highly fault tolerant, and are stable. Ye et al. [19] used a genetic algorithm to optimize the combination of decision trees in a parameter-optimized random forest; compared with the actual profit, the profit score of RFoGAPS increased by 7.73%.

In recent years, swarm intelligence algorithms inspired by biological behavior have been widely used in electronic information, engineering, biomedicine, and other fields. Sparse Bayesian Learning for end-to-end spatio-temporal-filtering-based single-trial EEG classification (SBLEST) optimizes spatio-temporal filters and the classifier simultaneously within a principled sparse Bayesian learning framework to maximize prediction accuracy [20,21]. Because feature extraction and emotion classification are performed independently at different stages of EEG decoding, and because we aimed to reduce the cost of the classification stage, we propose a method that dynamically optimizes the parameters of the RF model to improve accuracy. The optimization algorithm should also be structurally simple, easy to implement, and have few control parameters, so we selected the Sparrow Search Algorithm (SSA). We applied SSA to optimize the key parameters of RF and improve classification accuracy in cross-subject emotion recognition.

Experiments with SSA-RF on the DEAP and SEED datasets verified its adaptability and effectiveness, confirmed the necessity of optimizing classification model parameters, and showed reduced subject dependency.

2. Methods

2.1. Preprocessing

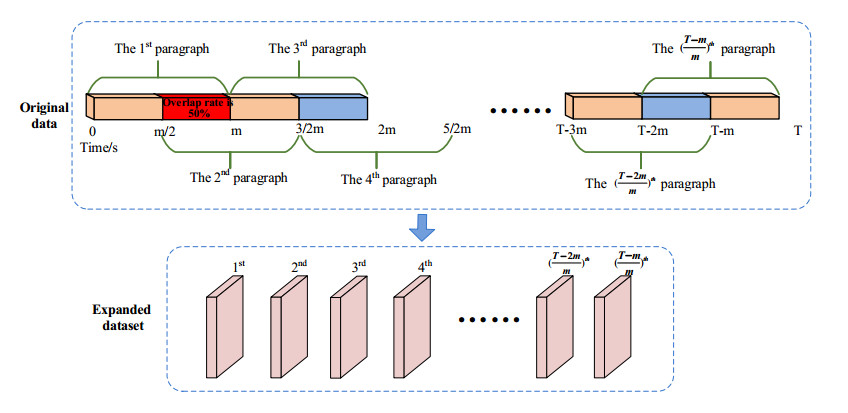

Windowing is used to augment the data and thereby avoid the overfitting caused by small sample sizes. For a recording of duration $T$ (s), a window of length $m$ (s) is slid along the signal with an overlap rate of 50%. The principle of windowing is shown in Figure 1.
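As a minimal sketch of the windowing step (not the authors' code), the function below slides a window of $m$ seconds along a recording with a hop of $m/2$ (50% overlap) and keeps only complete windows, so a recording of $T$ seconds yields $\lfloor (T-m)/(m/2) \rfloor + 1$ windows; for $T = 60$ s and $m = 10$ s that is 11 windows. The function name `sliding_windows` and the (channels, samples) array layout are assumptions for illustration.

```python
import numpy as np


def sliding_windows(signal: np.ndarray, fs: int, win_s: float, overlap: float = 0.5) -> np.ndarray:
    """Cut the last axis of `signal` into overlapping windows.

    Returns an array of shape (n_windows, *signal.shape[:-1], win_len).
    """
    win_len = int(round(win_s * fs))              # samples per window (m * fs)
    step = int(round(win_len * (1.0 - overlap)))  # hop size; 50% overlap -> win_len / 2
    n_samples = signal.shape[-1]
    if step <= 0 or win_len > n_samples:
        raise ValueError("window must be shorter than the signal and the step positive")
    n_windows = (n_samples - win_len) // step + 1  # keep only complete windows
    return np.stack([signal[..., k * step:k * step + win_len] for k in range(n_windows)])


# 60 s of 32-channel EEG at 128 Hz, 10-s windows, 50% overlap
trial = np.random.default_rng(0).standard_normal((32, 60 * 128))
windows = sliding_windows(trial, fs=128, win_s=10)
print(windows.shape)  # (11, 32, 1280)
```

Consecutive windows share half of their samples, which is what multiplies the number of training samples without recording new data.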

2.2. Feature extraction

Feature extraction is a crucial step in cross-subject emotion recognition because it can reveal hidden information about mental activity and cognitive function.

Different emotional features are reflected in different physical quantities of the signal. Traditional features are extracted from the time domain, the frequency domain, and the time-frequency domain [22]; the wavelet transform, for example, offers a good trade-off between time and frequency resolution. Soroush et al. [23] obtained good classification accuracy using the mean, skewness, and Shannon entropy. Our approach was motivated by work on combining different features [24] and on using principal component analysis and the discrete wavelet transform for feature selection [25].

In this paper, 9 time-domain features, 2 frequency-domain features, and 1 time-frequency-domain feature are extracted for SSA-RF cross-subject emotion recognition. All channels were used to retain as much information as possible.

2.2.1. Time domain features

In the time domain, the zero crossing rate (ZCR), standard deviation (SD), mean, root mean square (RMS), energy (Eng), skewness, approximate entropy (ApEn), sample entropy (SampEn), and Hjorth parameters are extracted as EEG features, as listed in Table 1.
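Table 1 is not reproduced here, so the sketch below uses common textbook definitions of most of these features for one channel of one window; it is an illustration under that assumption, not the paper's exact formulas. ZCR is returned as a count of sign changes, Hjorth is expanded into activity, mobility, and complexity, and SampEn uses the usual defaults $m = 2$ and $r = 0.2\,\mathrm{SD}$ (assumed). ApEn is omitted for brevity; it is computed analogously but counts self-matches.

```python
import numpy as np


def time_domain_features(x: np.ndarray) -> dict:
    """Common time-domain EEG features of a single-channel window."""
    x = np.asarray(x, dtype=float)
    mu, sd = x.mean(), x.std()
    dx, ddx = np.diff(x), np.diff(x, n=2)
    mobility = np.sqrt(dx.var() / x.var())                 # Hjorth mobility
    complexity = np.sqrt(ddx.var() / dx.var()) / mobility  # Hjorth complexity
    return {
        # number of sign changes; divide by len(x) - 1 for a rate
        "zcr": int(np.sum(np.signbit(x[:-1]) != np.signbit(x[1:]))),
        "sd": sd,
        "mean": mu,
        "rms": np.sqrt(np.mean(x ** 2)),
        "energy": np.sum(x ** 2),
        "skewness": np.mean((x - mu) ** 3) / sd ** 3,
        "hjorth_activity": x.var(),
        "hjorth_mobility": mobility,
        "hjorth_complexity": complexity,
    }


def sample_entropy(x: np.ndarray, m: int = 2, r_factor: float = 0.2) -> float:
    """SampEn = -ln(A / B): B and A count template pairs of length m and m + 1
    whose Chebyshev distance is <= r, excluding self-matches."""
    x = np.asarray(x, dtype=float)
    n = x.size
    r = r_factor * x.std()

    def count_pairs(length: int) -> int:
        # the same n - m starting points are used for both template lengths
        templates = np.array([x[i:i + length] for i in range(n - m)])
        total = 0
        for i in range(len(templates) - 1):
            dist = np.max(np.abs(templates[i + 1:] - templates[i]), axis=1)
            total += int(np.sum(dist <= r))
        return total

    b, a = count_pairs(m), count_pairs(m + 1)
    return float(-np.log(a / b)) if a > 0 and b > 0 else float("inf")
```

Because every match of length $m+1$ is also a match of length $m$, $A \le B$ and SampEn is non-negative; a perfectly regular signal gives 0.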

2.2.2. Frequency domain features

We transform the time-domain EEG into the frequency domain with the Fast Fourier Transform (FFT) and extract the Power Spectral Density (PSD) and Differential Entropy (DE) as features, as listed in Table 2.
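A minimal FFT-based sketch of band-wise PSD and DE for one channel, assuming the band edges below (the section does not state them) and the common Gaussian form of differential entropy, $\mathrm{DE} = \tfrac{1}{2}\ln(2\pi e\sigma^2)$, where $\sigma^2$ is the variance of the band-limited signal. The band-limited signal is obtained by zeroing FFT bins outside the band; a band-pass filter is an equally common alternative. `BANDS` and `band_psd_de` are hypothetical names.

```python
import numpy as np

# Assumed band edges (Hz); the section does not state them.
BANDS = {"delta": (1, 4), "theta": (4, 8), "alpha": (8, 14), "beta": (14, 31), "gamma": (31, 45)}


def band_psd_de(x: np.ndarray, fs: float) -> dict:
    """Mean PSD and Gaussian differential entropy of one channel in each band."""
    x = np.asarray(x, dtype=float)
    x = x - x.mean()
    n = x.size
    spectrum = np.fft.rfft(x)
    freqs = np.fft.rfftfreq(n, d=1.0 / fs)
    # One-sided periodogram: double every bin except DC (and Nyquist for even n)
    psd = np.abs(spectrum) ** 2 / (fs * n)
    psd[1:(-1 if n % 2 == 0 else None)] *= 2
    feats = {}
    for name, (lo, hi) in BANDS.items():
        mask = (freqs >= lo) & (freqs < hi)
        feats[f"psd_{name}"] = psd[mask].mean()
        # Band-limited signal by zeroing FFT bins outside the band
        band = np.fft.irfft(np.where(mask, spectrum, 0), n=n)
        var = max(band.var(), np.finfo(float).tiny)  # guard against log(0)
        # DE of a Gaussian with variance var: 0.5 * ln(2*pi*e*var)
        feats[f"de_{name}"] = 0.5 * np.log(2 * np.pi * np.e * var)
    return feats
```

For a unit-amplitude 10 Hz sine, the alpha band dominates the PSD and its DE equals $\tfrac{1}{2}\ln(\pi e)$, since the band variance is 0.5; doubling the amplitude raises DE by $\ln 2$.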

2.2.3. Time frequency domain feature

Because it captures characteristics of both the time and frequency domains, the time-frequency representation reflects EEG information more comprehensively. After transforming the EEG into the time-frequency domain with the wavelet transform (WT), the wavelet Shannon entropy (SE) is extracted as a feature. SE characterizes the uncertainty, information content, spectral characteristics, and time-frequency variation of the EEG and reveals the correlation between the EEG signal and emotion, enabling emotion recognition. The SE describes the information content and complexity of the signal at different times and frequencies, as shown in Eq (1):

$$ H(X) = -\sum_{x} P(x)\log_{2}P(x) \tag{1} $$

Here, $H(X)$ is the SE in bits, and $P(x)$ is the probability of each value $x$ of the sample data.
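The wavelet family, decomposition depth, and definition of $P(x)$ are not given in this section, so the sketch below makes three labeled assumptions: a Haar wavelet implemented directly in NumPy, 5 decomposition levels, and $P(x)$ taken as the relative energy of each decomposition level (a common choice for wavelet Shannon entropy). It then applies Eq (1) in bits.

```python
import numpy as np


def haar_level_energies(x: np.ndarray, levels: int = 5) -> np.ndarray:
    """Energy of the Haar detail coefficients at each level, plus the final approximation."""
    a = np.asarray(x, dtype=float)
    energies = []
    for _ in range(levels):
        if a.size % 2:                      # pad odd lengths by repeating the last sample
            a = np.append(a, a[-1])
        even, odd = a[0::2], a[1::2]
        energies.append(np.sum(((even - odd) / np.sqrt(2)) ** 2))  # detail coefficients
        a = (even + odd) / np.sqrt(2)                              # approximation coefficients
    energies.append(np.sum(a ** 2))
    return np.array(energies)


def wavelet_shannon_entropy(x: np.ndarray, levels: int = 5) -> float:
    """Eq (1) with P(x) taken as the relative wavelet energy of each level (an assumption)."""
    e = haar_level_energies(x, levels)
    p = e / e.sum()
    p = p[p > 0]                            # 0 * log2(0) is treated as 0
    return float(-np.sum(p * np.log2(p)))
```

Because the Haar transform is orthonormal, the level energies sum to the signal energy, so $P$ is a proper distribution and $0 \le H \le \log_2(\text{levels}+1)$; a constant signal puts all energy in the approximation and gives $H = 0$.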

2.3. The principle of SSA-RF

SSA, which is inspired by the foraging behavior of sparrows, is used to dynamically obtain the optimal number of decision trees (DTN) and minimum leaf size (MLN) of the RF.

According to the biological rules underlying SSA, discoverers first locate the best foraging area. Followers search for food around the discoverers or take food from them; individuals may also compete for food and update their foraging areas. When sparrows become aware of danger, they also update their foraging areas to avoid predators. Assume there are $n$ sparrows in a $d$-dimensional space, and let $X$ denote a sparrow's position. Discoverers are responsible for finding food for the population and guiding the followers' foraging direction. Accordingly, the position of a discoverer is updated as in Eq (2):

$$ X_{i,j}^{t+1} = \begin{cases} X_{i,j}^{t}\cdot \exp\left(-\dfrac{i}{\alpha \cdot iter_{max}}\right), & R_2 < S_T \\ X_{i,j}^{t} + Q\cdot L, & R_2 \ge S_T \end{cases} \tag{2} $$

Here, $iter_{max}$ is the maximum number of iterations, $t$ is the current iteration, $X_{i,j}$ is the position of sparrow $i$ in dimension $j$, $R_2$ and $S_T$ are the warning value and the safety threshold, respectively, $\alpha \in (0, 1]$ and $Q$ are random numbers, with $Q$ following a normal distribution, and $L$ is a $1\times d$ matrix whose elements are all 1. When $R_2 < S_T$, there are no predators around the foraging area, and the discoverer can search widely and safely. When $R_2 \ge S_T$, some sparrows have detected predators and raised the alarm, and all sparrows need to move promptly to other foraging areas.

The position update of the followers is given by Eq (3):

$$ X_{i,j}^{t+1} = \begin{cases} Q\cdot \exp\left(\dfrac{X_{worst}^{t} - X_{i,j}^{t}}{i^{2}}\right), & i > n/2 \\ X_{P}^{t+1} + \left|X_{i,j}^{t} - X_{P}^{t+1}\right|\cdot A^{+}\cdot L, & \text{otherwise} \end{cases} \tag{3} $$

Here, $X_P$ is the best position occupied by the current discoverers, $X_{worst}$ is the current global worst position, $A$ is a $1\times d$ matrix whose elements are randomly assigned 1 or $-1$, and $A^{+} = A^{T}\left(AA^{T}\right)^{-1}$. When $i > n/2$, the $i$-th follower, which has poor fitness, is starving and must fly elsewhere to forage.

When aware of danger, the sparrow population engages in anti-predation behavior, as described in Eq (4):

$$ X_{i,j}^{t+1} = \begin{cases} X_{best}^{t} + \beta\cdot\left|X_{i,j}^{t} - X_{best}^{t}\right|, & f_i > f_g \\ X_{i,j}^{t} + K\cdot\left(\dfrac{\left|X_{i,j}^{t} - X_{worst}^{t}\right|}{\left(f_i - f_w\right) + \epsilon}\right), & f_i = f_g \end{cases} \tag{4} $$

Here, $f_i$ is the fitness of the current sparrow, $f_g$ and $f_w$ are the current global best and worst fitness values, $X_{best}$ is the current global best position, $\beta$ is the step-size control parameter, a random number drawn from a standard normal distribution (mean 0, variance 1), $K \in [-1, 1]$ is a random number giving the direction of the sparrow's movement, and $\epsilon$ is a small constant that prevents division by zero.

When $f_i > f_g$, the sparrow is in a hazardous area and can easily be spotted or attacked by predators.

When $f_i = f_g$, the sparrow realizes that it is in a dangerous position and moves closer to other sparrows in the safe area to reduce the risk of predation. The implementation of SSA-RF is shown in Table 3.

In SSA-RF, the fitness function guides the search for the optimal DTN and MLN, using the classification error on the training and testing sets as the fitness value. When training is complete, the best position of the sparrow population is output, corresponding to the optimal DTN and MLN of the RF. Finally, the optimized values are plugged into the RF for the experiments, completing the SSA-RF parameter-optimization process. The flowchart of the SSA-RF algorithm is shown in Figure 2.
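The sketch below is a compact NumPy version of the sparrow search following the discoverer, follower, and anti-predation rules of Eqs (2) to (4), written as a generic minimizer; it is not the authors' implementation. The discoverer fraction `pd = 0.2`, alert fraction `sd = 0.1`, and safety threshold `st = 0.8` are assumed typical defaults, positions are clipped to the bounds, and `decode_rf_params` is a hypothetical helper that rounds a 2-D sparrow position to integer (DTN, MLN). To run the demo without data, the fitness is a toy sphere function.

```python
import numpy as np


def ssa_minimize(fitness, lb, ub, n=20, iter_max=20, pd=0.2, sd=0.1, st=0.8, seed=0):
    """Sparrow search following Eqs (2)-(4); returns (best position, best fitness)."""
    rng = np.random.default_rng(seed)
    lb, ub = np.asarray(lb, dtype=float), np.asarray(ub, dtype=float)
    d = lb.size
    X = lb + rng.random((n, d)) * (ub - lb)
    f = np.array([fitness(x) for x in X])
    n_disc = max(1, int(pd * n))          # discoverers
    n_alert = max(1, int(sd * n))         # sparrows that sense danger
    best_x, best_f = X[f.argmin()].copy(), f.min()

    for _ in range(iter_max):
        order = np.argsort(f)             # best (lowest error) first
        X, f = X[order], f[order]
        x_worst = X[-1].copy()

        # Eq (2): discoverers
        r2 = rng.random()
        for i in range(n_disc):
            if r2 < st:
                alpha = rng.uniform(1e-12, 1.0)                 # approximately (0, 1]
                X[i] = X[i] * np.exp(-(i + 1) / (alpha * iter_max))
            else:
                X[i] = X[i] + rng.standard_normal() * np.ones(d)  # Q * L
            X[i] = np.clip(X[i], lb, ub)
            f[i] = fitness(X[i])
        x_p = X[np.argmin(f[:n_disc])].copy()                   # best discoverer position

        # Eq (3): followers (paper uses 1-based index i + 1)
        for i in range(n_disc, n):
            if i + 1 > n / 2:
                X[i] = rng.standard_normal() * np.exp((x_worst - X[i]) / (i + 1) ** 2)
            else:
                A = rng.choice([-1.0, 1.0], size=(1, d))
                a_plus = A.T @ np.linalg.inv(A @ A.T)           # A+ = A^T (A A^T)^-1, shape (d, 1)
                X[i] = x_p + np.abs(X[i] - x_p) @ a_plus @ np.ones((1, d))
            X[i] = np.clip(X[i], lb, ub)
            f[i] = fitness(X[i])

        # Eq (4): anti-predation for randomly chosen sparrows
        g, w = f.argmin(), f.argmax()
        x_best, f_g = X[g].copy(), f[g]
        x_worst, f_w = X[w].copy(), f[w]
        for i in rng.choice(n, size=n_alert, replace=False):
            if f[i] > f_g:
                X[i] = x_best + rng.standard_normal() * np.abs(X[i] - x_best)
            else:                                               # f_i == f_g
                K = rng.uniform(-1.0, 1.0)
                X[i] = X[i] + K * np.abs(X[i] - x_worst) / ((f[i] - f_w) + 1e-50)
            X[i] = np.clip(X[i], lb, ub)
            f[i] = fitness(X[i])

        if f.min() < best_f:
            best_x, best_f = X[f.argmin()].copy(), f.min()
    return best_x, best_f


def decode_rf_params(pos):
    """Hypothetical mapping of a sparrow position to integer (DTN, MLN)."""
    dtn, mln = np.rint(pos).astype(int)
    return max(1, int(dtn)), max(1, int(mln))


best_x, best_f = ssa_minimize(lambda v: float(np.sum(v ** 2)), [-10.0, -10.0], [10.0, 10.0])
```

For SSA-RF, the fitness would instead decode the position with `decode_rf_params`, train a random forest with that many trees and that minimum leaf size (for example scikit-learn's `RandomForestClassifier(n_estimators=dtn, min_samples_leaf=mln)`), and return its classification error; the bounds would come from Table 5. Since the best solution is only ever replaced by a better one, the returned fitness never exceeds that of the initial population.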

3. Experimental results and analysis

3.1. Datasets and parameters

3.1.1. Datasets

The DEAP dataset was established by Koelstra et al. [26] at Queen Mary University of London. It includes multi-channel physiological signals, facial expression videos, and emotional self-assessment labels collected with Self-Assessment Manikins (SAM). It contains recordings from 32 healthy subjects (16 males and 16 females), of which the first 32 channels are EEG.

The SEED dataset was established by the BCMI laboratory at Shanghai Jiao Tong University and contains EEG recordings from 15 subjects (7 males and 8 females) with an average age of 23.37 years. Each session consisted of 15 trials, each comprising a 5-s hint before the start, a 4-min film clip, 45 s of self-assessment, and 15 s of rest. The emotion-inducing materials consisted of 15 clips from six films. After watching each clip, participants reported their emotional reactions in questionnaires, and the clips were divided into three classes: positive, neutral, and negative.

The data formats of the two datasets are shown in Table 4.

3.1.2. Parameters of SSA-RF

The parameters of SSA-RF are the population size, maximum number of iterations, dimension, upper bound, and lower bound; their values are shown in Table 5.

3.2. Preprocessing

The DEAP dataset consists of EEG signals recorded while participants were emotionally stimulated by videos; the participants then rated each video according to their subjective emotional experience.

The baseline signal mean was removed during baseline processing [27], and the preprocessed data were augmented by windowing. Each subject had 40 trials, one for each of the 40 emotion-inducing videos, and each trial lasted 60 s. With a 10-s window and a 50% overlap rate, each trial yielded 11 windows, so the 40 trials of each subject were reconstructed into 440 samples of 10 s. Each sample lasted 10 s at a sampling rate of 128 Hz over all 32 EEG channels, so it contained 1280 data points per channel. The original data were thus reconstructed from 40 × 32 × 7680 to 40 × 32 × 14,080 (11 windows × 1280 points).
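The sketch below reproduces the DEAP shape arithmetic under stated assumptions. The preprocessed DEAP trials are 63 s at 128 Hz, a 3-s pre-trial baseline followed by the 60-s stimulus; the baseline scheme of [27] is not described here, so the code assumes one common variant, averaging the three 1-s baseline segments and subtracting that mean segment from every 1-s segment of the stimulus. The windows are then concatenated along the time axis, giving 11 × 1280 = 14,080 points. Random numbers stand in for real EEG, and the function names are hypothetical.

```python
import numpy as np

FS = 128          # DEAP preprocessed sampling rate (Hz)
BASELINE_S = 3    # DEAP trials start with a 3-s pre-trial baseline


def remove_baseline_mean(trial: np.ndarray) -> np.ndarray:
    """Subtract the mean 1-s baseline segment from every 1-s segment of the stimulus.

    trial: (channels, 63 * FS) -> returns (channels, 60 * FS)
    """
    ch = trial.shape[0]
    base = trial[:, :BASELINE_S * FS].reshape(ch, BASELINE_S, FS).mean(axis=1)  # (ch, FS)
    stim = trial[:, BASELINE_S * FS:]
    n_sec = stim.shape[1] // FS
    segs = stim[:, :n_sec * FS].reshape(ch, n_sec, FS)
    return (segs - base[:, None, :]).reshape(ch, n_sec * FS)


def window_concat(x: np.ndarray, win_s: int = 10, overlap: float = 0.5) -> np.ndarray:
    """Cut the time axis into overlapping windows and concatenate them end to end."""
    win = win_s * FS
    step = int(win * (1 - overlap))
    starts = range(0, x.shape[-1] - win + 1, step)
    return np.concatenate([x[..., s:s + win] for s in starts], axis=-1)


# toy stand-in for one subject: 40 trials x 32 EEG channels x 63 s
raw = np.random.default_rng(0).standard_normal((40, 32, 63 * FS), dtype=np.float32)
clean = np.stack([remove_baseline_mean(t) for t in raw])  # (40, 32, 7680)
augmented = window_concat(clean)                           # (40, 32, 14080)
```

A constant offset present in both the baseline and the stimulus is removed entirely, which is the intended effect of baseline-mean subtraction.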

For the SEED dataset, the label of each trial is determined by the emotion-evoking clip it belongs to. During preprocessing, all data of each subject were integrated and reconstructed from the original format of 15 × 3 × 62 × M into 225 × 186 × M. The dataset contains 15 subjects, each exposed to the same 15 emotional clips, so the first dimension is 15 × 15 = 225. Each subject repeated the same experiment three times at intervals, and each experiment recorded 62 EEG channels, so the second dimension is 3 × 62 = 186. M is the number of data points per channel in a trial; because the trials differ in duration, M ranges from 37,001 to 53,001.
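The dimension bookkeeping can be checked with a NumPy reshape, assuming the data are first arranged as (subject, clip, session, channel, M) with a common M. Real SEED trials differ in length (M from 37,001 to 53,001), so in practice they would have to be cropped to a common length or kept as a list; the toy example uses M = 4. `reshape_seed` is a hypothetical name.

```python
import numpy as np


def reshape_seed(data: np.ndarray) -> np.ndarray:
    """(subject, clip, session, channel, M) -> (subject * clip, session * channel, M).

    Row r = subject * n_clips + clip; column c = session * n_channels + channel.
    """
    n_sub, n_clip, n_ses, n_ch, m = data.shape
    return data.reshape(n_sub * n_clip, n_ses * n_ch, m)


toy = np.arange(15 * 15 * 3 * 62 * 4).reshape(15, 15, 3, 62, 4)  # M = 4 for illustration
out = reshape_seed(toy)
print(out.shape)  # (225, 186, 4)
```

Because NumPy reshapes in row-major order, merging adjacent axes keeps each (subject, clip) pair in one row and each (session, channel) pair in one column, so no data are shuffled.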

3.3. Cross-subject emotion recognition on the DEAP dataset

We conducted 15 randomized grouping experiments on the DEAP dataset: 25 subjects were randomly selected as the training set and the remaining 7 as the test set, for 30 experiments, with 20 iterations carried out in each experiment.
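A minimal sketch of a subject-wise random split, in which all samples of a subject stay on the same side so the test subjects are never seen during training, which is what makes the evaluation cross-subject. The toy features and binary labels are placeholders, the 440 samples per subject follow from the windowing described in Section 3.2, and the function names are hypothetical.

```python
import numpy as np


def random_subject_split(n_subjects: int, n_train: int, rng: np.random.Generator):
    """Randomly partition subject IDs 0..n_subjects-1 into disjoint train/test groups."""
    perm = rng.permutation(n_subjects)
    return np.sort(perm[:n_train]), np.sort(perm[n_train:])


def split_by_subject(X, y, subject_ids, train_subjects):
    """Keep every sample of a subject on the same side of the split."""
    mask = np.isin(subject_ids, train_subjects)
    return X[mask], y[mask], X[~mask], y[~mask]


rng = np.random.default_rng(0)
subject_ids = np.repeat(np.arange(32), 440)       # 440 windowed samples per DEAP subject
X = rng.standard_normal((subject_ids.size, 7))    # toy feature matrix
y = rng.integers(0, 2, subject_ids.size)          # toy binary labels
for grouping in range(15):                        # 15 random groupings
    train_s, test_s = random_subject_split(32, 25, rng)
    X_tr, y_tr, X_te, y_te = split_by_subject(X, y, subject_ids, train_s)
    # fit SSA-RF on (X_tr, y_tr) here and evaluate on (X_te, y_te)
```

For SEED, the same helper would be called as `random_subject_split(15, 12, rng)`.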

A total of 20 features were extracted: ZCR, SD, Mean, RMS, Eng, Skewness, ApEn, SampEn, and Hjorth in the time domain; PSD and DE in each of five frequency bands (δ, θ, α, β, and γ); and SE in the time-frequency domain. We tested many feature combinations and selected the 8 combinations with the highest accuracy, listed below (F-all denotes all frequency-domain features):

Combination 1: All features of the composite domain (20 features);

Combination 2: ZCR, SD, Mean, RMS, Eng, Skewness, ApEn, SampEn, PSD, DE, SE;

Combination 3: SD, RMS, Eng, PSD-δ, DE-δ, DE-β, DE-γ;

Combination 4: F-all, SE, and Hjorth;

Combination 5: Mean, SampEn, DE-β, DE-γ, PSD-β, PSD-γ, SE;

Combination 6: SD, Mean, RMS, Eng, Skewness, ApEn, SampEn, DE-α, DE-β, DE-γ, PSD-α, PSD-β, and PSD-γ;

Combination 7: ZCR, SD, Mean, RMS, Eng, Skewness, ApEn, SampEn, DE-α, DE-β, DE-γ, PSD-α, PSD-β and PSD-γ;

Combination 8: SD, Mean, RMS, Eng, and F-α, β, γ;
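One way to organize these combinations is a fixed feature-name index from which each combination selects columns. The sketch assumes a feature array laid out as (samples, channels, 20 features) in the order below, with Hjorth counted as a single feature as in the text; the names and layout are illustrative assumptions, not the authors' data structures.

```python
import numpy as np

TIME = ["ZCR", "SD", "Mean", "RMS", "Eng", "Skewness", "ApEn", "SampEn", "Hjorth"]
BANDS = ["δ", "θ", "α", "β", "γ"]
FREQ = [f"{kind}-{band}" for kind in ("PSD", "DE") for band in BANDS]
ALL_FEATURES = TIME + FREQ + ["SE"]  # 9 + 10 + 1 = 20

COMBINATION_3 = ["SD", "RMS", "Eng", "PSD-δ", "DE-δ", "DE-β", "DE-γ"]


def select_features(features: np.ndarray, combo) -> np.ndarray:
    """Pick the columns of `combo` from a (..., 20) feature array laid out as ALL_FEATURES."""
    idx = [ALL_FEATURES.index(name) for name in combo]
    return features[..., idx]


feats = np.arange(3 * 32 * 20).reshape(3, 32, 20)  # toy: 3 samples x 32 channels x 20 features
subset = select_features(feats, COMBINATION_3)     # shape (3, 32, 7)
```

The count check (9 time-domain, 10 frequency-domain, 1 time-frequency) matches the 20 features listed above.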

RF parameters are usually set empirically: the empirical values of DTN and MLN are 30 and 1, respectively, but these values do not suit every type of data. We therefore applied SSA to automatically search for the optimal DTN and MLN of the RF for each of the 8 combinations; the optimal values are shown in Table 6.

Table 6 shows that the optimal parameter values differed across feature combinations and from the empirical values, particularly for DTN. To determine which feature combination achieves the highest accuracy, we ran 100 epochs for each feature combination on the DEAP dataset.

Figure 3 shows violin plots of the accuracy for the different combinations. Combination 3 had the highest median accuracy and combination 8 the lowest. Overall, the accuracy of each combination ranged from 72% to 81%.

On the DEAP dataset, SSA-RF improved test-set accuracy compared with RF. The classification results and improvements are shown in Figure 4 and Table 7.

Table 7 and Figure 4 show that SSA-RF was more accurate than RF for every feature combination, with an average improvement of 1.62%. Combination 1 showed the largest improvement (3.65%), while combination 3 achieved the highest accuracy with an improvement of 3.02%. Combination 3 was therefore selected as the optimal feature combination.

We then analyzed misclassified subjects under combination 3, taking as an example subject 15, whose negative emotions were misclassified as positive. We compared the values of the combination 3 features (SD, RMS, Eng, PSD-δ, DE-δ, DE-β, DE-γ) for subject 15 with the training-set means of the same features, as shown in Figure 5.

Figure 5 shows that the SD and RMS of subject 15 differed markedly from the training-set means: SDmean = 11.75 versus SD15 = 87.27 (ΔSD ≈ 75.52), and RMSmean = 16.13 versus RMS15 = 79.8 (ΔRMS ≈ 63.69). The other feature values of subject 15 were also higher than the corresponding training-set means. This indicates that subject 15 exhibited pronounced individual differences within the dataset, which likely explains the misclassification. Subjects with such individual differences should be included in the training set so that SSA-RF achieves better generalization.

3.4. Cross-subject emotion recognition on SEED dataset

We conducted 15 randomized grouping experiments on the SEED dataset: 12 subjects were randomly selected as the training set and the remaining 3 as the test set, for 30 experiments, with 20 iterations carried out in each experiment.

A total of 18 features were extracted: ZCR, SD, Mean, RMS, Eng, Skewness, and Hjorth in the time domain; PSD and DE in each of five frequency bands (δ, θ, α, β, and γ); and SE in the time-frequency domain. We tested many feature combinations and selected the 8 combinations with the highest accuracy, listed below (T-all and F-all denote all time-domain and all frequency-domain features, respectively):

Combination 1: All time domain features;

Combination 2: All features (18 features);

Combination 3: RMS, Eng, PSD-δ, DE-δ, DE-β, DE-γ;

Combination 4: DE-β, DE-α, PSD-β, PSD-α;

Combination 5: Eng, Skewness, Hjorth, PSD;

Combination 6: T-all and F-all;

Combination 7: DE-θ, DE-δ, PSD-θ, PSD-δ;

Combination 8: T-all and PSD;

The empirical values of DTN and MLN (30 and 1, respectively) likewise do not suit every type of data. We applied SSA to automatically search for the optimal DTN and MLN of the RF for the 8 combinations; the optimal values are shown in Table 8.

Because multiple experiments were performed for each feature combination on the SEED dataset, the results of all experiments were recorded and summarized in the violin plots in Figure 6.

The violin plots show the accuracy of each feature combination on the SEED dataset. Combination 1 achieved clearly higher accuracy than the other 7 combinations, and accuracies ranged from 65% to 93%, a wider span than on the DEAP dataset.

On the SEED dataset, SSA-RF reached a training-set accuracy close to 100%. The test-set accuracy and improvement for the three-class task are shown in Figure 7 and Table 9.

Figure 7 and Table 9 show that SSA-RF had a stronger optimization effect on the SEED dataset than on the DEAP dataset. SSA-RF was more accurate than RF for every feature combination, with an average improvement of 9.85%. Combination 1 (all time-domain features) reached an accuracy of 82.58%, an improvement of 9.25%.

To analyze the misclassifications of combination 1 (all time-domain features) on the SEED dataset, we extracted the combination 1 features of subject 1 for a misclassified case (positive emotions misclassified as negative) and compared them with the corresponding training-set means, as shown in Figure 8.

Figure 8 shows that the ZCR and SD of subject 1 differ markedly from the training-set means.

With ZCRmean = 6930.1 and ZCR1 = 218,213.6, the difference is more than thirty-fold; likewise, SDmean = 2880.4 while SD1 = 206,475.2 (about 72-fold). The other feature values of subject 1 were also higher than the training-set means. Subject 1 therefore exhibited pronounced individual differences in this dataset, which lowered its accuracy. Future work should include subject 1 in the training set of SSA-RF to achieve better generalization.

3.5. Comparison of similar methods

We also compared SSA with particle swarm optimization (PSO), the whale optimization algorithm (WOA), and the genetic algorithm (GA) as RF optimizers on the DEAP dataset. The experimental results are shown in Table 10.

Overall, SSA performed best among these optimization algorithms.

4. Discussion

We compared our findings with previous research; the average accuracy was computed over 100 epochs, and the comparison is shown in Table 11.

Table 11 compares SSA-RF with related studies from the past 7 years. The average accuracy of our method was 76.81% on the DEAP dataset and 75.96% on the SEED dataset, both higher than those of the other methods. SSA-RF thus improved the accuracy of cross-subject emotion recognition.

5. Conclusions

To our knowledge, no previous study has used SSA to optimize RF for EEG-based emotion recognition. This research demonstrated that SSA-RF achieves better accuracy in cross-subject emotion recognition. After extracting composite-domain features from the EEG signals, we conducted experiments with a variety of feature combinations and used SSA to find the optimal RF parameters, which markedly improved accuracy. For the DEAP dataset, the average accuracy was 76.81%, with a maximum accuracy of 77.57%, which was 1.61% higher than RF. For the SEED dataset, the average accuracy was 75.96%, with a maximum accuracy of 82.58%, which was 9.85% higher than RF.

The SSA-RF algorithm proposed in this research is applicable to the classification training of personal emotion models and avoids the high time cost and low adaptability of setting model parameters manually. SSA-RF can be applied in practice and has theoretical and practical significance for the development of emotion recognition.

Other factors that affect the accuracy or efficiency of cross-subject emotion recognition include the baseline processing method and the automatic optimization of feature combinations. Multi-baseline processing and automatic feature selection are therefore important directions for future research.

Author contributions

Conceptualization and methodology, X.Z.; software, S.W. and X.Z.; formal analysis, X.Z. and S.W.; investigation, X.Z. and Y.S.; data curation, K.X.; writing-original draft preparation, X.Z., S.W.; writing-review and editing, X.Z. and K.X.; funding acquisition, X.Z. and R.Z. All authors have read and agreed to the published version of the manuscript.

Use of AI tools declaration

The authors declare they have not used Artificial Intelligence (AI) tools in the creation of this article.

Acknowledgments

This research was funded by the National Natural Science Foundation of China (grant number 81901827), the Natural Science Basic Research Program of Shaanxi Province (grant number 2022JM-146), and the 2024 Graduate Innovation Fund of Xi'an Polytechnic University.

Informed consent statement

The data used in this research come from the public DEAP and SEED datasets.

Data availability statement

http://www.eecs.qmul.ac.uk/mmv/datasets/deap/; https://bcmi.sjtu.edu.cn/~seed/index.html.

Conflict of interest

The authors declare no competing interests.
